Description

OpenMeetings 2.1 or later is required to use clustering. All OpenMeetings servers use one database, so all database tables are shared across OM instances. Certain folders should be shared between all servers so that files and recordings are accessible from every node.

Configuration

- Multiple OM servers should be set up as described in Installation

- All servers should be configured to use the same time zone (to prevent schedulers from dropping user sessions as outdated)

- All servers should be configured to use the same DB

- Servers should be added in the Administration -> Servers section
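The time zone and database requirements above are easy to get wrong on a freshly installed node. As a rough aid, the following Python sketch compares settings you have collected from each node by hand. The `NodeInfo` class, the `check_nodes` helper and the example JDBC URL are hypothetical and not part of OpenMeetings.

```python
from dataclasses import dataclass


@dataclass
class NodeInfo:
    """Settings collected manually from one OM node (hypothetical structure)."""
    name: str
    timezone: str  # time zone name configured on the node, e.g. "UTC"
    db_url: str    # database URL configured in persistence.xml


def check_nodes(nodes):
    """Return a list of problems; an empty list means the nodes agree."""
    problems = []
    timezones = {n.timezone for n in nodes}
    if len(timezones) > 1:
        problems.append(f"time zones differ: {sorted(timezones)}")
    db_urls = {n.db_url for n in nodes}
    if len(db_urls) > 1:
        problems.append(f"database URLs differ: {sorted(db_urls)}")
    return problems


if __name__ == "__main__":
    # Example values only; the JDBC URL below is an assumption.
    nodes = [
        NodeInfo("node1", "UTC", "jdbc:mysql://10.1.1.1:3306/openmeetings"),
        NodeInfo("node2", "Europe/Berlin", "jdbc:mysql://10.1.1.1:3306/openmeetings"),
    ]
    for problem in check_nodes(nodes) or ["nodes are consistent"]:
        print(problem)
```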

Database

All servers should be configured to use the same database. The database can run on a separate server or on one of the cluster nodes.

- Add users who can connect to the database remotely

- Update /opt/red5/webapps/openmeetings/WEB-INF/classes/META-INF/persistence.xml: set the correct database server address, login and password. Also add the following property:

<property name="openjpa.RemoteCommitProvider" value="tcp(Addresses=10.1.1.1;10.1.1.2)" />

Replace 10.1.1.1 and 10.1.1.2 with the semicolon-separated IP addresses of all nodes of the cluster.
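If the cluster has more than two nodes, the address list grows accordingly. A small helper such as the hypothetical `remote_commit_property` below can generate the property line from a list of node IPs and reject malformed addresses before they reach persistence.xml. It is a sketch that only assembles the string shown above; it does not edit the file.

```python
import ipaddress


def remote_commit_property(node_ips):
    """Build the openjpa.RemoteCommitProvider line for persistence.xml."""
    if not node_ips:
        raise ValueError("at least one node IP address is required")
    # ip_address() raises ValueError for malformed addresses
    checked = [str(ipaddress.ip_address(ip)) for ip in node_ips]
    addresses = ";".join(checked)  # the address list is semicolon separated
    return ('<property name="openjpa.RemoteCommitProvider" '
            f'value="tcp(Addresses={addresses})" />')


if __name__ == "__main__":
    print(remote_commit_property(["10.1.1.1", "10.1.1.2", "10.1.1.3"]))
```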

File systems

If all nodes use the same physical folders for files and recordings, those files and recordings will be available on every node. You can share the folders using, for example, Samba or NFS. To use NFS, do the following:

- Install the NFS server on the data server. Add the following lines to /etc/exports:

/opt/red5/webapps/openmeetings/upload 10.1.1.2(rw,sync,no_subtree_check,no_root_squash)
/opt/red5/webapps/openmeetings/streams 10.1.1.2(rw,sync,no_subtree_check,no_root_squash)

Here 10.1.1.2 is the IP address of a node that needs remote NFS access. Add these lines for every node except the node that hosts the folders.
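With several nodes, the export entries repeat once per node. The hypothetical Python sketch below generates them, assuming the data server's own IP is skipped and the options stay exactly as in the example above. Review the output before pasting it into /etc/exports.

```python
EXPORTED_DIRS = [
    "/opt/red5/webapps/openmeetings/upload",
    "/opt/red5/webapps/openmeetings/streams",
]
EXPORT_OPTIONS = "rw,sync,no_subtree_check,no_root_squash"


def exports_lines(data_server_ip, node_ips):
    """Return /etc/exports entries granting every other node access."""
    lines = []
    for ip in node_ips:
        if ip == data_server_ip:
            continue  # the data server uses its folders locally
        for path in EXPORTED_DIRS:
            lines.append(f"{path} {ip}({EXPORT_OPTIONS})")
    return lines


if __name__ == "__main__":
    nodes = ["10.1.1.1", "10.1.1.2", "10.1.1.3"]
    print("\n".join(exports_lines("10.1.1.1", nodes)))
```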

- Install the NFS common tools on the other nodes. Add the following lines to /etc/fstab:

10.1.1.1:/opt/red5/webapps/openmeetings/upload/ /opt/red5/webapps/openmeetings/upload nfs timeo=50,hard,intr
10.1.1.1:/opt/red5/webapps/openmeetings/streams/ /opt/red5/webapps/openmeetings/streams nfs timeo=50,hard,intr

Here 10.1.1.1 is the IP address of the data server. Then run the command:

mount -a
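On each client node the fstab entries differ only in the data server IP. The following hypothetical Python sketch builds them and skips entries that are already present (compared as exact lines), which keeps a re-run from adding duplicates. It does not write /etc/fstab or call mount.

```python
SHARED_DIRS = [
    "/opt/red5/webapps/openmeetings/upload",
    "/opt/red5/webapps/openmeetings/streams",
]
MOUNT_OPTIONS = "timeo=50,hard,intr"


def fstab_lines(data_server_ip):
    """Return the /etc/fstab entries that mount the shared folders over NFS."""
    return [
        f"{data_server_ip}:{path}/ {path} nfs {MOUNT_OPTIONS}"
        for path in SHARED_DIRS
    ]


def missing_entries(fstab_text, entries):
    """Return entries not yet present (exact-line comparison only)."""
    existing = {line.strip() for line in fstab_text.splitlines()}
    return [entry for entry in entries if entry not in existing]


if __name__ == "__main__":
    current = "UUID=1234 / ext4 defaults 0 1\n"  # example fstab content
    for entry in missing_entries(current, fstab_lines("10.1.1.1")):
        print(entry)
```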

OM nodes configuration

In the file /opt/red5/webapps/openmeetings/WEB-INF/classes/openmeetings-applicationContext.xml:

- On each node, uncomment the following line and set a value that is unique for each node:

<!-- Need to be uncommented and set to the real ID if in cluster mode-->
<property name="serverId" value="1" />

- Replace

<ref bean="openmeetings.HashMapStore" /> <!-- Memory based session cache by default -->

with

<ref bean="openmeetings.DatabaseStore" />

(The DatabaseStore reference is currently commented out and follows the comment "The following section should be used in clustering mode".)
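Because every node must have its own serverId and must use the DatabaseStore, a quick cross-check of the edited files can catch copy-paste mistakes. The Python sketch below is hypothetical: it uses simple regular expressions rather than a real XML parser, assumes the property is written exactly as in the line above, and ignores anything inside XML comments.

```python
import re
from collections import Counter

COMMENT_RE = re.compile(r"<!--.*?-->", re.DOTALL)
SERVER_ID_RE = re.compile(r'<property\s+name="serverId"\s+value="([^"]*)"\s*/>')


def check_node_config(contexts):
    """contexts maps node name -> text of its openmeetings-applicationContext.xml."""
    problems = []
    ids = {}
    for node, xml in contexts.items():
        active = COMMENT_RE.sub("", xml)  # drop commented-out XML
        match = SERVER_ID_RE.search(active)
        if match is None:
            problems.append(f"{node}: serverId is not set")
        else:
            ids[node] = match.group(1)
        if '<ref bean="openmeetings.DatabaseStore"' not in active:
            problems.append(f"{node}: DatabaseStore is not enabled")
    counts = Counter(ids.values())
    for node, sid in ids.items():
        if counts[sid] > 1:
            problems.append(f"{node}: serverId {sid} is shared with another node")
    return problems


if __name__ == "__main__":
    node1 = '<property name="serverId" value="1" /><ref bean="openmeetings.DatabaseStore" />'
    node2 = '<property name="serverId" value="1" /><ref bean="openmeetings.DatabaseStore" />'
    for problem in check_node_config({"node1": node1, "node2": node2}):
        print(problem)
```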

Configuring cluster in Administration

Start Red5 on each node and log in to the system as admin.

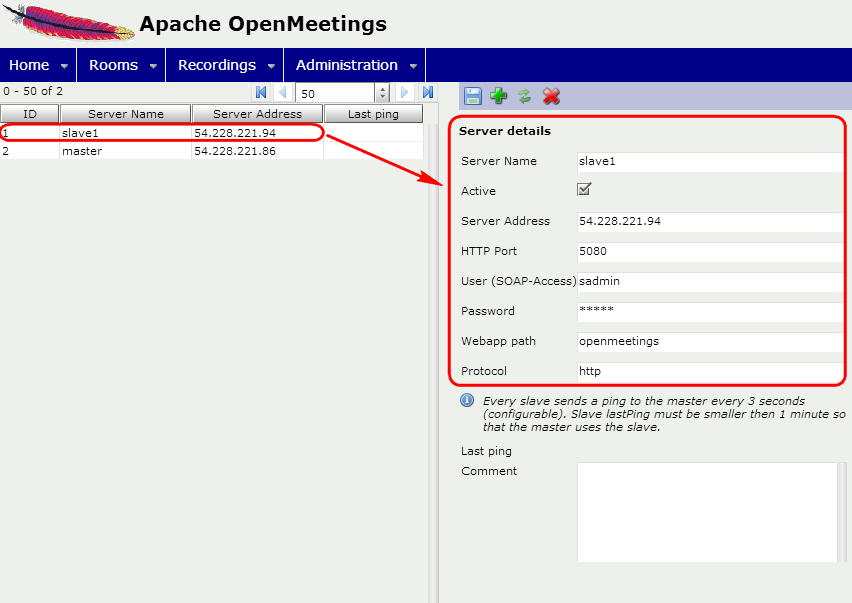

- Go to Administration -> Users and create a Webservice user (with SOAP access only).

- Go to Administration -> Servers and add all cluster nodes with the following settings:

  - Server Name = node name
  - Active = checked. A checked box means the node is active and can be used
  - Server Address = node IP
  - HTTP Port = 5080 (the port of the HTTP part of OpenMeetings)
  - User (SOAP Access) = login of the Webservice user created in the previous step
  - Password = password of the Webservice user
  - Webapp path = openmeetings (the path where OM is installed on this node; preferably the same on all nodes)
  - Protocol = http
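Before typing the values into the form, it may help to write down one record per node. The Python sketch below models the fields listed above as a hypothetical `ClusterServer` dataclass. The URL it builds assumes OpenMeetings is reached at protocol://address:port/webapp-path/, which matches the default settings above but is not taken from the OpenMeetings code.

```python
from dataclasses import dataclass


@dataclass
class ClusterServer:
    """One entry of the Administration -> Servers form (hypothetical model)."""
    name: str
    address: str
    http_port: int = 5080
    webapp_path: str = "openmeetings"
    protocol: str = "http"
    active: bool = True

    def base_url(self):
        # Assumed URL layout: <protocol>://<address>:<port>/<webapp path>/
        return f"{self.protocol}://{self.address}:{self.http_port}/{self.webapp_path.strip('/')}/"


def check_servers(servers):
    """Return problems found in the planned server entries."""
    problems = []
    if len({s.webapp_path for s in servers}) > 1:
        problems.append("webapp paths differ between nodes")
    if not any(s.active for s in servers):
        problems.append("no server is marked active")
    return problems


if __name__ == "__main__":
    servers = [ClusterServer("node1", "10.1.1.1"), ClusterServer("node2", "10.1.1.2")]
    for s in servers:
        print(s.name, s.base_url())
    print(check_servers(servers) or "server entries look consistent")
```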

Ensure everything works as expected

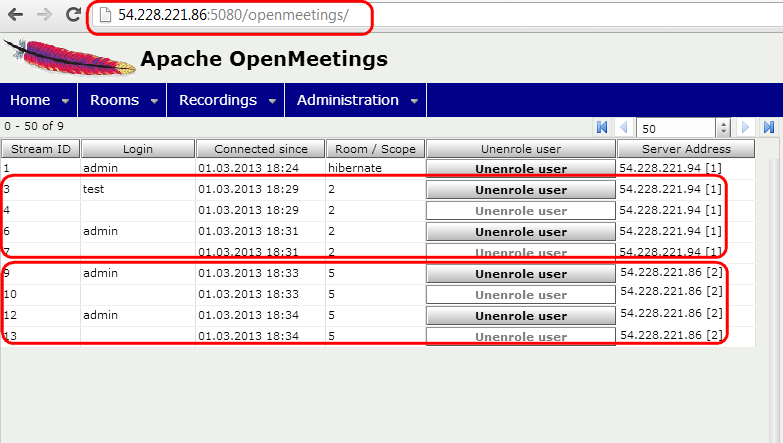

- Set up the cluster, log in with two users and go to the same room (before entering, also check that the status page with the room list shows the correct number of participants). Both users should initially log in to the same server; the server will automatically redirect each of them to the appropriate server for the conference room. Both users should end up in the same room.

- Repeat the test with two users, but this time send them to _different_ rooms. The calculation should send the users to different servers, because on a cluster with two nodes two different rooms should be placed exactly one room per node. While those users are logged in, log in directly to node1 and node2 of your cluster, go to Administration -> Connections and check the "servers" column to see where each user is located. They should be on different servers.
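The exact calculation OpenMeetings uses to pick a server is not described here. The following Python sketch is only a hypothetical least-loaded rule that reproduces the outcome both tests expect: users entering the same room share a server, and on a two-node cluster two different rooms end up on different nodes.

```python
def assign_rooms(user_rooms, servers):
    """user_rooms: list of (user, room) pairs in login order.

    Returns a dict mapping each user to the server hosting their room.
    """
    room_server = {}
    rooms_per_server = {s: 0 for s in servers}
    result = {}
    for user, room in user_rooms:
        if room not in room_server:
            # Hypothetical rule: a new room goes to the server hosting the
            # fewest rooms; ties go to the first server in the list.
            target = min(servers, key=lambda s: rooms_per_server[s])
            room_server[room] = target
            rooms_per_server[target] += 1
        result[user] = room_server[room]
    return result


if __name__ == "__main__":
    nodes = ["node1", "node2"]
    print(assign_rooms([("alice", "room1"), ("bob", "room1")], nodes))
    print(assign_rooms([("alice", "room1"), ("bob", "room2")], nodes))
```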