| Return type | Member | Description |
| --- | --- | --- |
| virtual void | Init (int thread_id, int grp_id, int id) | |
| void | Setup (const JobProto &job, shared_ptr< NeuralNet > train_net, shared_ptr< NeuralNet > valid_net, shared_ptr< NeuralNet > test_net) | Set up members. |
| void | Run () | Main function of Worker. |
| void | InitLocalParams () | Initialize all local params (i.e., params from layers resident in this worker). |
| void | Checkpoint (int step, shared_ptr< NeuralNet > net) | Checkpoint all params owned by the worker from the first group onto disk. |
| void | Test (int nsteps, Phase phase, shared_ptr< NeuralNet > net) | Test the performance of the learned model on the validation or test dataset. |
| virtual void | TrainOneBatch (int step, Metric \*perf)=0 | Train one mini-batch. |
| virtual void | TestOneBatch (int step, Phase phase, shared_ptr< NeuralNet > net, Metric \*perf)=0 | Test/validate one mini-batch. |
| void | Report (const string &prefix, const Metric &perf) | Report performance to the stub. |
| int | Put (Param \*param, int step) | Put Param to server. |
| int | Get (Param \*param, int step) | Get Param with a specific version from the server. If the current version >= the requested version, then return. |
| int | Update (Param \*param, int step) | Update Param. |
| int | Collect (Param \*param, int step) | Block until the param is updated since sending the update request. |
| int | CollectAll (shared_ptr< NeuralNet > net, int step) | Call Collect for every param of net. |
| void | ReceiveBlobs (bool data, bool grad, BridgeLayer \*layer, shared_ptr< NeuralNet > net) | Receive blobs from other workers due to model partitions. |
| void | SendBlobs (bool data, bool grad, BridgeLayer \*layer, shared_ptr< NeuralNet > net) | Send blobs to other workers due to model partitions. |
| bool | DisplayNow (int step) const | Check whether it is time to display training info, e.g., loss and precision. |
| bool | DisplayDebugInfo (int step) const | Check whether it is time to display training info, e.g., loss and precision. |
| bool | StopNow (int step) const | Check whether it is time to stop. |
| bool | CheckpointNow (int step) const | Check whether it is time to do a checkpoint. |
| bool | TestNow (int step) const | Check whether it is time to do a test. |
| bool | ValidateNow (int step) const | Check whether it is time to do validation. |
| int | grp_id () const | |
| int | id () const | Worker ID within the worker group. |

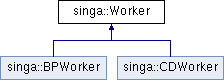

The Worker class which runs the training algorithm.

The first worker group initializes the parameters of the Net and puts them into the distributed memory/table. The virtual functions TrainOneBatch and TestOneBatch implement the training and test algorithms for one mini-batch of data.

Child workers override these two functions to implement their training algorithms, e.g., BPWorker, CDWorker, and BPTTWorker implement the BP, CD, and BPTT algorithms, respectively.
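The override pattern described above can be sketched in isolation. This is a minimal, self-contained illustration and not SINGA code: the `sketch` namespace, the simplified `Metric`, the `Run(int train_steps)` driver, and `ToyBPWorker` are all hypothetical stand-ins, and the `shared_ptr< NeuralNet >` argument of the real TestOneBatch is omitted.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration only; these are NOT the SINGA types.
namespace sketch {

enum class Phase { kTrain, kValidation, kTest };

// Simplified metric: accumulates a loss value per mini-batch.
struct Metric {
  double loss = 0.0;
  int count = 0;
  void Add(double v) { loss += v; ++count; }
};

// Base class: owns the driver loop, defers the per-batch algorithm to children.
class Worker {
 public:
  virtual ~Worker() = default;

  // Assumed, simplified driver: runs a fixed number of training steps.
  void Run(int train_steps) {
    Metric perf;
    for (int step = 0; step < train_steps; ++step) TrainOneBatch(step, &perf);
    last_train_ = perf;
  }
  const Metric& last_train() const { return last_train_; }

  // The two functions that child workers override.
  virtual void TrainOneBatch(int step, Metric* perf) = 0;
  virtual void TestOneBatch(int step, Phase phase, Metric* perf) = 0;

 private:
  Metric last_train_;
};

// Toy child worker: records a forward and a backward pass per training batch.
class ToyBPWorker : public Worker {
 public:
  std::vector<std::string> trace;

  void TrainOneBatch(int step, Metric* perf) override {
    trace.push_back("forward");
    trace.push_back("backward");
    perf->Add(1.0 / (step + 1));  // made-up loss value
  }
  void TestOneBatch(int /*step*/, Phase /*phase*/, Metric* perf) override {
    trace.push_back("forward");  // testing needs no backward pass
    perf->Add(0.5);              // made-up loss value
  }
};

}  // namespace sketch
```

Calling `Run(2)` on a `sketch::ToyBPWorker` invokes the overridden TrainOneBatch twice, so the base class never needs to know which algorithm the child implements.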

void singa::Worker::InitLocalParams ( )

Init all local params (i.e., params from layers resident in this worker).

If the param is owned by the worker, then initialize it and put it to the servers. Otherwise, call Get() to get the param. The Get() call may not send a get request, because the param's owner may be in the same process; once the owner initializes the param, its version is visible to all params that share it. If training starts from scratch, the params are initialized using random distributions, e.g., a Gaussian distribution. After that, the worker may train for a couple of steps to warm up the params before putting them to the servers (the warmup field of JobProto controls this).

If the owner param is available from a checkpoint file, then its values are parsed from the checkpoint file instead of being randomly initialized. Params that do not have checkpoints are randomly initialized.
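The decision logic described above can be sketched as follows. This is an illustration under stated assumptions, not the SINGA implementation: `Param`, `Checkpoint`, `FakeServer`, and the free function `InitLocalParams` in the `sketch` namespace are hypothetical, the Gaussian parameters are arbitrary, and the warmup steps and the same-process shortcut for Get() are left out.

```cpp
#include <cassert>
#include <map>
#include <random>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration only; these are NOT the SINGA types.
namespace sketch {

struct Param {
  std::string name;
  bool owned_by_this_worker = false;
  std::vector<float> data;
  int version = -1;  // -1 means "not initialized yet"
};

// Assumed checkpoint representation: param name -> saved values.
using Checkpoint = std::map<std::string, std::vector<float>>;

// In-memory stand-in for the parameter servers.
struct FakeServer {
  std::map<std::string, Param> table;

  void Put(const Param& p) { table[p.name] = p; }

  // Copies values and version into *p; returns false if the param is unknown.
  bool Get(Param* p) const {
    auto it = table.find(p->name);
    if (it == table.end()) return false;
    p->data = it->second.data;
    p->version = it->second.version;
    return true;
  }
};

inline void InitLocalParams(std::vector<Param>* params, const Checkpoint& ckpt,
                            FakeServer* server, unsigned seed = 0) {
  std::mt19937 gen(seed);
  std::normal_distribution<float> gauss(0.0f, 0.01f);  // arbitrary choice

  for (Param& p : *params) {
    if (p.owned_by_this_worker) {
      auto it = ckpt.find(p.name);
      if (it != ckpt.end()) {
        p.data = it->second;  // restore values from the checkpoint
      } else {
        for (float& v : p.data) v = gauss(gen);  // random initialization
      }
      p.version = 0;
      server->Put(p);  // publish the initialized param
    } else {
      server->Get(&p);  // another worker owns it, so fetch its values
    }
  }
}

}  // namespace sketch
```

In this sketch an owned param found in the checkpoint keeps its saved values, an owned param without a checkpoint entry is drawn from the Gaussian, and a non-owned param is only read from the server.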
