UIMA Overview & SDK Setup

Version 2.4.0

Copyright © 2006, 2011 The Apache Software Foundation

Copyright © 2004, 2006 International Business Machines Corporation

License and Disclaimer. The ASF licenses this documentation to you under the Apache License, Version 2.0 (the "License"); you may not use this documentation except in compliance with the License. You may obtain a copy of the License at

Unless required by applicable law or agreed to in writing, this documentation and its contents are distributed under the License on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Trademarks. All terms mentioned in the text that are known to be trademarks or service marks have been appropriately capitalized. Use of such terms in this book should not be regarded as affecting the validity of the trademark or service mark.

November, 2011

Table of Contents

- 1. Overview

- 2. UIMA Conceptual Overview

- 3. Eclipse IDE setup for UIMA

- 4. UIMA FAQ's

- 5. Known Issues

- Glossary

Chapter 1. UIMA Overview

The Unstructured Information Management Architecture (UIMA) is an architecture and software framework for creating, discovering, composing and deploying a broad range of multi-modal analysis capabilities and integrating them with search technologies. The architecture is undergoing a standardization effort, referred to as the UIMA specification by a technical committee within OASIS.

The Apache UIMA framework is an Apache licensed, open source implementation of the UIMA Architecture, and provides a run-time environment in which developers can plug in and run their UIMA component implementations and with which they can build and deploy UIM applications. The framework itself is not specific to any IDE or platform.

It includes an all-Java implementation of the UIMA framework for the development, description, composition and deployment of UIMA components and applications. It also provides the developer with an Eclipse-based (http://www.eclipse.org/ ) development environment that includes a set of tools and utilities for using UIMA. It also includes a C++ version of the framework, and enablements for Annotators built in Perl, Python, and TCL.

This chapter is the intended starting point for readers that are new to the Apache UIMA Project. It includes this introduction and the following sections:

-

Section 1.1, “Apache UIMA Project Documentation Overview” provides a list of the books and topics included in the Apache UIMA documentation with a brief summary of each.

-

Section 1.2, “How to use the Documentation” describes a recommended path through the documentation to help get the reader up and running with UIMA.

-

Section 1.4, “Migrating from IBM UIMA to Apache UIMA” is intended for users of IBM UIMA, and describes the steps needed to upgrade to Apache UIMA.

-

Section 1.3.2, “Changes from UIMA Version 1.x” lists the changes that occurred between UIMA v1.x and UIMA v2.x (independent of the transition to Apache).

The main website for Apache UIMA is http://uima.apache.org. Here you can find out many things, including:

how to download (both the binary and source distributions)

how to participate in the development

mailing lists - including the user list used like a forum for questions and answers

a Wiki where you can find and contribute all kinds of information, including tips and best practices

a sandbox - a subproject for potential new additions to Apache UIMA or to subprojects of it. Things here are works in progress, and may (or may not) be included in releases.

links to conferences

1.1. Apache UIMA Project Documentation Overview

The user documentation for UIMA is organized into several parts.

-

Overviews - this documentation

-

Eclipse Tooling Installation and Setup - also in this document

-

Tutorials and Developer's Guides

-

Tools Users' Guides

-

References

The first 2 parts make up this book; the last 3 have individual books. The books are provided both as (somewhat large) html files, viewable in browsers, and also as PDF files. The documentation is fully hyperlinked, with tables of contents. The PDF versions are set up to print nicely - they have page numbers included on the cross references within a book.

If you view the PDF files inside a browser that supports embedded viewing of PDF, the hyperlinks between different PDF books may work (not all browsers have been tested).

The following set of tables gives a more detailed overview of the various parts of the documentation.

1.1.1. Overviews

| Overview of the Documentation | What you are currently reading. Lists the documents provided in the Apache UIMA documentation set and provides a recommended path through the documentation for getting started using UIMA. It includes release notes and provides a brief high-level description of the different software modules included in the Apache UIMA Project. See Section 1.1, “Apache UIMA Project Documentation Overview”. |

| Conceptual Overview | Provides a broad conceptual overview of the UIMA component architecture; includes references to the other documents in the documentation set that provide more detail. See Chapter 2, UIMA Conceptual Overview |

| UIMA FAQs | Frequently Asked Questions about general UIMA concepts. (Not a programming resource.) See Chapter 4, UIMA Frequently Asked Questions (FAQ's). |

| Known Issues | Known issues and problems with the UIMA SDK. See Chapter 5, Known Issues. |

| Glossary | UIMA terms and concepts and their basic definitions. See Glossary. |

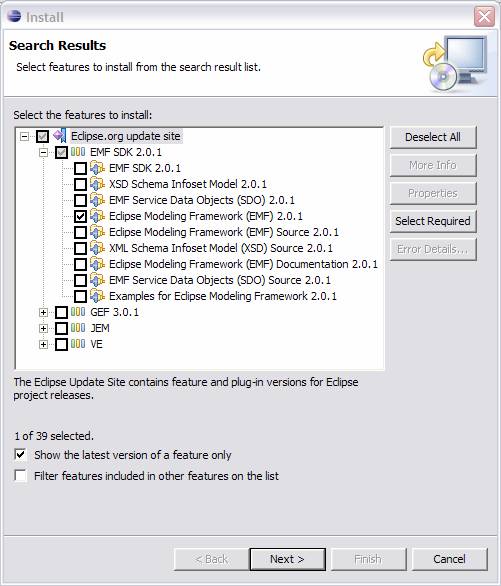

1.1.2. Eclipse Tooling Installation and Setup

Provides step-by-step instructions for installing Apache UIMA in the Eclipse Interactive Development Environment. See Chapter 3, Setting up the Eclipse IDE to work with UIMA.

1.1.3. Tutorials and Developer's Guides

| Annotators and Analysis Engines | Tutorial-style guide for building UIMA annotators and analysis engines. This chapter introduces the developer to creating type systems and using UIMA's common data structure, the CAS or Common Analysis Structure. It demonstrates how to use built in tools to specify and create basic UIMA analysis components. See Chapter 1, Annotator and Analysis Engine Developer's Guide. |

| Building UIMA Collection Processing Engines | Tutorial-style guide for building UIMA collection processing engines. These manage the analysis of collections of documents from source to sink. See Chapter 2, Collection Processing Engine Developer's Guide. |

| Developing Complete Applications | Tutorial-style guide on using the UIMA APIs to create, run and manage UIMA components from your application. Also describes APIs for saving and restoring the contents of a CAS using an XML format called XMI®. See Chapter 3, Application Developer's Guide. |

| Flow Controller | When multiple components are combined in an Aggregate, each CAS flows among the various components. UIMA provides two built-in flows, and also allows custom flows to be implemented. See Chapter 4, Flow Controller Developer's Guide. |

| Developing Applications using Multiple Subjects of Analysis | A single CAS may be associated with multiple subjects of analysis (Sofas). These are useful for representing and analyzing different formats or translations of the same document. For multi-modal analysis, Sofas are good for different modal representations of the same stream (e.g., audio and closed captions). This chapter provides the developer details on how to use multiple Sofas in an application. See Chapter 5, Annotations, Artifacts, and Sofas. |

| Multiple CAS Views of an Artifact | UIMA provides an extension to the basic model of the CAS which supports analysis of multiple views of the same artifact, all contained within the CAS. This chapter describes the concepts, terminology, and the API and XML extensions that enable this. See Chapter 6, Multiple CAS Views of an Artifact. |

| CAS Multiplier | A component may add additional CASes into the workflow. This may be useful to break up a large artifact into smaller units, or to create a new CAS that collects information from multiple other CASes. See Chapter 7, CAS Multiplier Developer's Guide. |

| XMI and EMF Interoperability | The UIMA Type system and the contents of the CAS itself can be externalized using the XMI standard for XML MetaData. Eclipse Modeling Framework (EMF) tooling can be used to develop applications that use this information. See Chapter 8, XMI and EMF Interoperability. |

1.1.4. Tools Users' Guides

| Component Descriptor Editor | Describes the features of the Component Descriptor Editor Tool. This tool provides a GUI for specifying the details of UIMA component descriptors, including those for Analysis Engines (primitive and aggregate), Collection Readers, CAS Consumers and Type Systems. See Chapter 1, Component Descriptor Editor User's Guide. |

| Collection Processing Engine Configurator | Describes the User Interfaces and features of the CPE Configurator tool. This tool allows the user to select and configure the components of a Collection Processing Engine and then to run the engine. See Chapter 2, Collection Processing Engine Configurator User's Guide. |

| Pear Packager | Describes how to use the PEAR Packager utility. This utility enables developers to produce an archive file for an analysis engine that includes all required resources for installing that analysis engine in another UIMA environment. See Chapter 9, PEAR Packager User's Guide. |

| Pear Installer | Describes how to use the PEAR Installer utility. This utility installs and verifies an analysis engine from an archive file (PEAR) with all its resources in the right place so it is ready to run. See Chapter 11, PEAR Installer User's Guide. |

| Pear Merger | Describes how to use the Pear Merger utility, which does a simple merge of multiple PEAR packages into one. See Chapter 12, PEAR Merger User's Guide. |

| Document Analyzer | Describes the features of a tool for applying a UIMA analysis engine to a set of documents and viewing the results. See Chapter 3, Document Analyzer User's Guide. |

| CAS Visual Debugger | Describes the features of a tool for viewing the detailed structure and contents of a CAS. Good for debugging. See Chapter 5, CAS Visual Debugger. |

| JCasGen | Describes how to run the JCasGen utility, which automatically builds Java classes that correspond to a particular CAS Type System. See Chapter 8, JCasGen User's Guide. |

| XML CAS Viewer | Describes how to run the supplied viewer to view externalized XML forms of CASes. This viewer is used in the examples. See Chapter 4, Annotation Viewer. |

1.1.5. References

| Introduction to the UIMA API Javadocs | Javadocs detailing the UIMA programming interfaces. See Chapter 1, Javadocs. |

| XML: Component Descriptor | Provides detailed XML format for all the UIMA component descriptors, except the CPE (see next). See Chapter 2, Component Descriptor Reference. |

| XML: Collection Processing Engine Descriptor | Provides detailed XML format for the Collection Processing Engine descriptor. See Chapter 3, Collection Processing Engine Descriptor Reference. |

| CAS | Provides detailed description of the principal CAS interface. See Chapter 4, CAS Reference. |

| JCas | Provides details on the JCas, a native Java interface to the CAS. See Chapter 5, JCas Reference. |

| PEAR Reference | Provides detailed description of the deployable archive format for UIMA components. See Chapter 6, PEAR Reference. |

| XMI CAS Serialization Reference | Provides detailed description of the XMI format used to serialize the contents of a CAS. See Chapter 7, XMI CAS Serialization Reference. |

1.2. How to use the Documentation

-

Explore this chapter to get an overview of the different documents that are included with Apache UIMA.

-

Read Chapter 2, UIMA Conceptual Overview to get a broad view of the basic UIMA concepts and philosophy with reference to the other documents included in the documentation set which provide greater detail.

-

For more general information on the UIMA architecture and how it has been used, refer to the IBM Systems Journal special issue on Unstructured Information Management, on-line at http://www.research.ibm.com/journal/sj43-3.html, or to the section of the Apache UIMA project website where other publications are listed.

-

Set up Apache UIMA in your Eclipse environment. To do this, follow the instructions in Chapter 3, Setting up the Eclipse IDE to work with UIMA.

-

Develop sample UIMA annotators, run them and explore the results. Read Chapter 1, Annotator and Analysis Engine Developer's Guide and follow it like a tutorial to learn how to develop your first UIMA annotator and set up and run your first UIMA analysis engines.

-

As part of this you will use a few tools including

-

The UIMA Component Descriptor Editor, described in more detail in Chapter 1, Component Descriptor Editor User's Guide and

-

The Document Analyzer, described in more detail in Chapter 3, Document Analyzer User's Guide.

-

While following along in Chapter 1, Annotator and Analysis Engine Developer's Guide, reference documents that may help are:

-

Chapter 2, Component Descriptor Reference for understanding the analysis engine descriptors

-

Chapter 5, JCas Reference for understanding the JCas

-

Learn how to create, run and manage a UIMA analysis engine as part of an application. Connect your analysis engine to the provided semantic search engine to learn how a complete analysis and search application may be built with Apache UIMA. Chapter 3, Application Developer's Guide will guide you through this process.

-

As part of this you will use the document analyzer (described in more detail in Chapter 3, Document Analyzer User's Guide) and semantic search GUI tools (see Section 3.5.2, “Semantic Search Query Tool”).

-

Pat yourself on the back. Congratulations! If you reached this step successfully, then you have an appreciation for the UIMA analysis engine architecture. You will have built a few sample annotators, deployed UIMA analysis engines to analyze a few documents, searched over the results using the built-in semantic search engine and viewed the results through a built-in viewer, all as part of a simple but complete application.

-

Develop and run a Collection Processing Engine (CPE) to analyze and gather the results of an entire collection of documents. Chapter 2, Collection Processing Engine Developer's Guide will guide you through this process.

-

As part of this you will use the CPE Configurator tool. For details see Chapter 2, Collection Processing Engine Configurator User's Guide.

-

You will also learn about CPE Descriptors. The detailed format for these may be found in Chapter 3, Collection Processing Engine Descriptor Reference.

-

Learn how to package up an analysis engine for easy installation into another UIMA environment. Chapter 9, PEAR Packager User's Guide and Chapter 11, PEAR Installer User's Guide will teach you how to create UIMA analysis engine archives so that you can easily share your components with a broader community.

1.3. Changes from Previous Major Versions

There are two previous versions of UIMA, available from IBM's alphaWorks: version 1.4.x and version 2.0 (the 2.0 version was a "beta" only release). This section describes the changes relative to both of these releases. A migration utility is provided which updates your Java code and descriptors as needed for this release. See Section 1.4, “Migrating from IBM UIMA to Apache UIMA” for instructions on how to run the migration utility.

Note

Each Apache UIMA release includes RELEASE_NOTES and RELEASE_NOTES.html files that describe the changes that have occurred in each release. Please refer to those files for specific changes for each Apache UIMA release.

1.3.1. Changes from IBM UIMA 2.0 to Apache UIMA 2.1

This section describes what has changed between version 2.0 and version 2.1 of UIMA; the following section describes the differences between version 1.4 and version 2.1.

1.3.1.1. Java Package Name Changes

All of the UIMA Java package names have changed in Apache UIMA. They now start with org.apache rather than com.ibm. There have been other changes as well. The package name segment reference_impl has been shortened to impl, and some segments have been reordered. For example, com.ibm.uima.reference_impl.analysis_engine has become org.apache.uima.analysis_engine.impl. Tools are now consolidated under org.apache.uima.tools and service adapters under org.apache.uima.adapter.

The migration utility will replace all occurrences of IBM UIMA package names with their Apache UIMA equivalents. It will not replace prefixes of package names, so if your code uses a package called com.ibm.uima.myproject (although that is not recommended), it will not be replaced.
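The whole-name replacement rule described above can be illustrated with a minimal sketch. It is not the migration utility's actual code: the class name, the regular expression, and the three-entry rename table (limited to package names mentioned in this chapter) are assumptions made for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch; the real utility's rename table and matching logic are not shown in this chapter.
final class PackageRenameSketch {
    // Longest names first, so a more specific package wins over its parent.
    private static final Map<String, String> RENAMES = new LinkedHashMap<>();
    static {
        RENAMES.put("com.ibm.uima.reference_impl.analysis_engine", "org.apache.uima.analysis_engine.impl");
        RENAMES.put("com.ibm.uima.jcas.tcas", "org.apache.uima.jcas.tcas");
        RENAMES.put("com.ibm.uima", "org.apache.uima");
    }

    static String migrate(String source) {
        String result = source;
        for (Map.Entry<String, String> e : RENAMES.entrySet()) {
            // Match the package name only when it stands alone or is followed by
            // ".ClassName" or ".*"; a following lowercase segment means it is just
            // a prefix of some other package, which is left untouched.
            Pattern p = Pattern.compile(
                "(?<![\\w.])" + Pattern.quote(e.getKey()) + "(?=\\.[A-Z*]|[^\\w.]|$)");
            result = p.matcher(result).replaceAll(Matcher.quoteReplacement(e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(migrate("import com.ibm.uima.reference_impl.analysis_engine.Foo;"));
        System.out.println(migrate("import com.ibm.uima.myproject.Thing;"));
    }
}
```

In this sketch, Foo and Thing are made-up class names. The second import is returned unchanged because com.ibm.uima is only a prefix of com.ibm.uima.myproject.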

1.3.1.2. XML Descriptor Changes

The XML namespace in UIMA component descriptors has changed from http://uima.watson.ibm.com/resourceSpecifier to http://uima.apache.org/resourceSpecifier. The value of the <frameworkImplementation> element must now be org.apache.uima.java or org.apache.uima.cpp. The migration script will apply these replacements.
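As a rough illustration of the two descriptor changes, the following sketch rewrites the namespace with a plain string replacement and checks a frameworkImplementation value against the two allowed values. The class and method names are hypothetical, and the real migration script may work differently.

```java
// Hypothetical sketch of the descriptor changes described above; not the migration script itself.
final class DescriptorNamespaceSketch {
    static final String OLD_NS = "http://uima.watson.ibm.com/resourceSpecifier";
    static final String NEW_NS = "http://uima.apache.org/resourceSpecifier";

    // Replaces every occurrence of the IBM namespace URI with the Apache one.
    static String migrateNamespace(String descriptorXml) {
        return descriptorXml.replace(OLD_NS, NEW_NS);
    }

    // Only the two values named in the text are accepted.
    static boolean isValidFrameworkImplementation(String value) {
        return "org.apache.uima.java".equals(value) || "org.apache.uima.cpp".equals(value);
    }

    public static void main(String[] args) {
        String before = "<analysisEngineDescription xmlns=\"" + OLD_NS + "\">";
        System.out.println(migrateNamespace(before));
        System.out.println(isValidFrameworkImplementation("org.apache.uima.java"));
    }
}
```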

1.3.1.3. TCAS replaced by CAS

In Apache UIMA the TCAS interface has been removed. All uses of it must now be replaced by the CAS interface. (All methods that used to be defined on TCAS were moved to CAS in v2.0.) The method CAS.getTCAS() is replaced with CAS.getCurrentView() and CAS.getTCAS(String) is replaced with CAS.getView(String). The following have also been removed and replaced with the equivalent "CAS" variants: TCASException, TCASRuntimeException, TCasPool, and CasCreationUtils.createTCas(...).

The migration script will apply the necessary replacements.
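To make the method mapping concrete, here is a small text-rewriting sketch covering only the TCAS type name and the two getTCAS variants named above. It is an illustration under stated assumptions (hypothetical class name, regex-based matching), not the migration script's implementation; the exception and pool renames are left out.

```java
import java.util.regex.Pattern;

// Hypothetical sketch: rewrites source text, it does not call the UIMA API.
final class TcasRewriteSketch {
    private static final Pattern NO_ARG_CALL = Pattern.compile("\\.getTCAS\\(\\s*\\)");
    private static final Pattern ARG_CALL = Pattern.compile("\\.getTCAS\\(");
    private static final Pattern TYPE_NAME = Pattern.compile("\\bTCAS\\b");

    static String rewrite(String source) {
        // getTCAS() with no argument becomes getCurrentView()
        String out = NO_ARG_CALL.matcher(source).replaceAll(".getCurrentView()");
        // any remaining getTCAS(...) call takes a view name and becomes getView(...)
        out = ARG_CALL.matcher(out).replaceAll(".getView(");
        // the TCAS type itself becomes CAS
        return TYPE_NAME.matcher(out).replaceAll("CAS");
    }

    public static void main(String[] args) {
        System.out.println(rewrite("TCAS view = cas.getTCAS();"));
        System.out.println(rewrite("TCAS view = cas.getTCAS(\"audio\");"));
    }
}
```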

1.3.1.4. JCas Is Now an Interface

In previous versions, user code accessed the JCas class directly. In Apache UIMA there is now an interface, org.apache.uima.jcas.JCas, which all JCas-based user code must now use. Static methods that were previously on the JCas class (and called from JCas cover classes generated by JCasGen) have been moved to the new org.apache.uima.jcas.JCasRegistry class. The migration script will apply the necessary replacements to your code, including any JCas cover classes that are part of your codebase.

1.3.1.5. JAR File names Have Changed

The UIMA JAR file names have changed slightly. Underscores have been replaced with hyphens to be consistent with Apache naming conventions. For example, uima_core.jar is now uima-core.jar. Also, uima_jcas_builtin_types.jar has been renamed to uima-document-annotation.jar. Finally, the jVinci.jar file is now in the lib directory rather than the lib/vinci directory as was previously the case. The migration script will apply the necessary replacements, for example to script files or Eclipse launch configurations. (See Section 1.4.1, “Running the Migration Utility” for a list of file extensions that the migration utility will process by default.)
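The three JAR changes can be summarized in a short sketch. The class name is hypothetical, and two details are assumptions rather than documented behavior: only file names beginning with uima_ have their underscores replaced, and paths use forward slashes.

```java
// Hypothetical sketch of the JAR renames described above; not the migration script itself.
final class JarRenameSketch {
    static String migrateJarPath(String path) {
        // jVinci.jar moved from lib/vinci up to lib
        if (path.endsWith("lib/vinci/jVinci.jar")) {
            return path.substring(0, path.length() - "vinci/jVinci.jar".length()) + "jVinci.jar";
        }
        int slash = path.lastIndexOf('/');
        String dir = path.substring(0, slash + 1);
        String name = path.substring(slash + 1);
        // one JAR was given a new name rather than just new separators
        if (name.equals("uima_jcas_builtin_types.jar")) {
            return dir + "uima-document-annotation.jar";
        }
        // assumption: only UIMA's own JARs (uima_*.jar) switch to hyphens
        if (name.startsWith("uima_") && name.endsWith(".jar")) {
            return dir + name.replace('_', '-');
        }
        return path;
    }

    public static void main(String[] args) {
        System.out.println(migrateJarPath("lib/uima_core.jar"));
        System.out.println(migrateJarPath("lib/vinci/jVinci.jar"));
    }
}
```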

1.3.1.6. Semantic Search Engine Repackaged

The versions of the UIMA SDK prior to the move into Apache came with a semantic search engine. The Apache version does not include this search engine. The search engine has been repackaged and is separately available from http://www.alphaworks.ibm.com/tech/uima. The intent is to hook up (over time) with other open source search engines, such as the Lucene search engine project in Apache.

1.3.2. Changes from UIMA Version 1.x

Version 2.x of UIMA provides new capabilities and refines several areas of the UIMA architecture, as compared with version 1.

1.3.2.1. New Capabilities

New Primitive data types. UIMA now supports Boolean (bit), Byte, Short (16 bit integers), Long (64 bit integers), and Double (64 bit floating point) primitive types, and arrays of these. These types can be used like all the other primitive types.

Simpler Analysis Engines and CASes. Version 1.x made a distinction between Analysis Engines and Text Analysis Engines. This distinction has been eliminated in Version 2 - new code should just refer to Analysis Engines. Analysis Engines can operate on multiple kinds of artifacts, including text.

Sofas and CAS Views simplified. The APIs for manipulating multiple subjects of analysis (Sofas) and their corresponding CAS Views have been simplified.

Analysis Component generalized to support multiple new CAS outputs. Analysis Components, in general, can make use of new capabilities to return multiple new CASes, in addition to returning the original CAS that is passed in. This allows components to have Collection Reader-like capabilities, but be placed anywhere in the flow. See Chapter 7, CAS Multiplier Developer's Guide.

User-customized Flow Controllers. A new component, the Flow Controller, can be supplied by the user to implement arbitrary flow control for CASes within an Aggregate. This is in addition to the two built-in flow control choices of linear and language-capability flow. See Chapter 4, Flow Controller Developer's Guide.

1.3.2.2. Other Changes

New additional Annotator API ImplBase. As of version 2.1, UIMA has a new set of Annotator interfaces. Annotators should now extend CasAnnotator_ImplBase or JCasAnnotator_ImplBase instead of the v1.x TextAnnotator_ImplBase and JTextAnnotator_ImplBase. The v1.x annotator interfaces are unchanged and are still supported for backwards compatibility.

The new Annotator interfaces support the changed approaches for ResultSpecifications and the changed exception names (see below), and have all the methods that CAS Consumers have, including CollectionProcessComplete and BatchProcessComplete.

UIMA Exceptions rationalized. In version 1 there were different exceptions for the methods of an AnalysisEngine and for the corresponding methods of an Annotator; these were merged in version 2.

AnnotatorProcessException (v1) → AnalysisEngineProcessException (v2)

AnnotatorInitializationException (v1) → ResourceInitializationException (v2)

AnnotatorConfigurationException (v1) → ResourceConfigurationException (v2)

AnnotatorContextException (v1) → ResourceAccessException (v2)

The previous exceptions are still available, but new code should use the new exceptions.

Note

The signature for typeSystemInit changed the “throws” clause to throw AnalysisEngineProcessException. For Annotators that extend the previous base, the previous definition of typeSystemInit will continue to work for backwards compatibility.

Changes in Result Specifications. In version 1, the process(...) method took a second argument, a ResultSpecification. Now the ResultSpecification is set through a method call whenever it changes, and it is up to the annotator to store it in a local field and make it available when needed. This approach lets the annotator receive a specific signal (a method call) when the Result Specification changes. Previously, it would need to check on every call to see if it changed. The default impl base classes provide set/getResultSpecification(...) methods for this.
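The store-on-change pattern for Result Specifications can be sketched with plain Java stand-ins. Nothing here is the UIMA API: ResultSpec and SketchAnnotator are hypothetical types, and a result specification is reduced to a set of requested type names for illustration.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Hypothetical stand-ins that illustrate the pattern only; these are not UIMA classes.
final class ResultSpecSketch {
    // Simplified result specification: the names of the types a caller wants produced.
    static final class ResultSpec {
        final Set<String> requestedTypes;
        ResultSpec(Set<String> requestedTypes) {
            this.requestedTypes = Collections.unmodifiableSet(new HashSet<>(requestedTypes));
        }
    }

    static final class SketchAnnotator {
        private ResultSpec resultSpec = new ResultSpec(Collections.<String>emptySet());
        private int specChanges = 0;

        // Called only when the specification changes; the annotator keeps it in a field.
        void setResultSpecification(ResultSpec spec) {
            this.resultSpec = spec;
            this.specChanges++;
        }

        ResultSpec getResultSpecification() {
            return resultSpec;
        }

        int specChangeCount() {
            return specChanges;
        }

        // Processing code consults the stored field instead of receiving a second argument.
        boolean shouldProduce(String typeName) {
            return resultSpec.requestedTypes.contains(typeName);
        }
    }

    public static void main(String[] args) {
        SketchAnnotator annotator = new SketchAnnotator();
        annotator.setResultSpecification(new ResultSpec(Collections.singleton("Token")));
        System.out.println(annotator.shouldProduce("Token"));
    }
}
```

Because the specification arrives through its own method call, any expensive setup that depends on it can be redone in the setter rather than rechecked on every process call.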

Only one Capability Set. In version 1, you could define multiple capability sets. These were not well supported, and version 2 simplifies this: you should use only one capability set. (For backwards compatibility, using more than one does not cause a problem for now.)

TextAnalysisEngine deprecated; use AnalysisEngine instead. TextAnalysisEngine has been deprecated - it is now no different than AnalysisEngine. Previous code that uses this should still continue to work, however.

Annotator Context deprecated; use UimaContext instead. The context for the Annotator is the same as the overall UIMA context. The impl base classes provide a getContext() method which now returns the UimaContext object.

DocumentAnalyzer tool uses XMI formats. The DocumentAnalyzer tool saves outputs in the new XMI serialization format. The AnnotationViewer and SemanticSearchGUI tools can read both the new XMI format and the previous XCAS format.

CAS Initializer deprecated. Example code that used CAS Initializers has been rewritten to not use this.

1.3.2.3. Backwards Compatibility

Other than the changes from IBM UIMA to Apache UIMA described above, most UIMA 1.x applications should not require additional changes to upgrade to UIMA 2.x. However, there are a few exceptions that UIMA 1.x users may need to be aware of:

-

There have been some changes to ResultSpecifications. We do not guarantee 100% backwards compatibility for applications that made use of them, although most cases should work.

-

For applications that deal with multiple subjects of analysis (Sofas), the rules that determine whether a component is Multi-View or Single-View have been made more consistent. A component is considered Multi-View if and only if it declares at least one inputSofa or outputSofa in its descriptor. This leads to the following incompatibilities in unusual cases:

-

It is an error if an annotator that implements the TextAnnotator or JTextAnnotator interface also declares inputSofas or outputSofas in its descriptor. Such annotators must be Single-View.

-

Annotators that implement GenericAnnotator but do not declare any inputSofas or outputSofas will now be passed the view of default Sofa instead of the Base CAS.
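The Multi-View rule and the first of the unusual cases above can be written down as a small sketch. The class and method names are hypothetical, and the Sofa declarations are modeled simply as lists of names taken from a descriptor.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch of the rule stated above; not framework code.
final class ViewModeSketch {
    // Multi-View if and only if at least one inputSofa or outputSofa is declared.
    static boolean isMultiView(List<String> inputSofas, List<String> outputSofas) {
        return !inputSofas.isEmpty() || !outputSofas.isEmpty();
    }

    // An annotator implementing TextAnnotator or JTextAnnotator must be Single-View.
    static boolean isValidDeclaration(boolean implementsTextAnnotator,
                                      List<String> inputSofas, List<String> outputSofas) {
        return !(implementsTextAnnotator && isMultiView(inputSofas, outputSofas));
    }

    public static void main(String[] args) {
        List<String> none = Collections.<String>emptyList();
        System.out.println(isMultiView(none, none));
        System.out.println(isValidDeclaration(true, Arrays.asList("audio"), none));
    }
}
```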

1.4. Migrating from IBM UIMA to Apache UIMA

In Apache UIMA, several things have changed that require changes to user code and descriptors. A migration utility is provided which will make the required updates to your files. The most significant change is that the Java package names for all of the UIMA classes and interfaces have changed from what they were in IBM UIMA; all of the package names now start with the prefix org.apache.

1.4.1. Running the Migration Utility

Note

Before running the migration utility, be sure to back up your files, just in case you encounter any problems, because the migration tool updates the files in place in the directories where it finds them.

The migration utility is run by executing the script file apache-uima/bin/ibmUimaToApacheUima.bat (Windows) or apache-uima/bin/ibmUimaToApacheUima.sh (UNIX). You must pass one argument: the directory containing the files that you want to be migrated. Subdirectories will be processed recursively.

The script scans your files and applies the necessary updates, for example replacing the com.ibm package names with the new org.apache package names. For more details on what has changed in the UIMA APIs and what changes are performed by the migration script, see Section 1.3.1, “Changes from IBM UIMA 2.0 to Apache UIMA 2.1”.

The script will only attempt to modify files with the extensions java, xml, xmi, wsdd, properties, launch, bat, cmd, sh, ksh, or csh, and files with no extension. Also, files with size greater than 1,000,000 bytes will be skipped. (If you want the script to modify files with other extensions, you can edit the script file and change the -ext argument appropriately.)
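The default file selection rule can be expressed as a short predicate. This is a sketch under assumptions, not the utility's code: the class and method names are hypothetical, and the extension match is taken to be case-sensitive, which the text does not specify.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch of the default selection rule described above.
final class MigrationFileFilterSketch {
    static final Set<String> EXTENSIONS = new HashSet<>(Arrays.asList(
        "java", "xml", "xmi", "wsdd", "properties", "launch", "bat", "cmd", "sh", "ksh", "csh"));
    static final long MAX_SIZE_BYTES = 1_000_000L;

    static boolean shouldProcess(String fileName, long sizeInBytes) {
        // files larger than 1,000,000 bytes are skipped
        if (sizeInBytes > MAX_SIZE_BYTES) {
            return false;
        }
        int dot = fileName.lastIndexOf('.');
        // files with no extension are processed
        if (dot < 0) {
            return true;
        }
        // assumption: the extension must match one of the listed values exactly
        return EXTENSIONS.contains(fileName.substring(dot + 1));
    }

    public static void main(String[] args) {
        System.out.println(shouldProcess("MyAnnotator.java", 2048L));
        System.out.println(shouldProcess("model.bin", 2048L));
    }
}
```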

If the migration tool reports warnings, there may be a few additional steps to take. The following two sections explain some simple manual changes that you might need to make to your code.

1.4.1.1. JCas Cover Classes for DocumentAnnotation

If you have run JCasGen it is likely that you have the classes com.ibm.uima.jcas.tcas.DocumentAnnotation and com.ibm.uima.jcas.tcas.DocumentAnnotation_Type as part of your code. This package name is no longer valid, and the migration utility does not move your files between directories so it is unable to fix this.

If you have not made manual modifications to these classes, the best solution is usually to just delete these two classes (and their containing package). There is a default version in the uima-document-annotation.jar file that is included in Apache UIMA. If you have made custom changes, then you should not delete the file but instead move it to the correct package org.apache.uima.jcas.tcas. For more information about JCas and DocumentAnnotation please see Section 5.5.4, “Adding Features to DocumentAnnotation”.

1.4.1.2. JCas.getDocumentAnnotation

The deprecated method JCas.getDocumentAnnotation has been removed. Its use must be replaced with JCas.getDocumentAnnotationFs. The method JCas.getDocumentAnnotationFs() returns type TOP, so your code must cast this to type DocumentAnnotation. The reasons for this are described in Section 5.5.4, “Adding Features to DocumentAnnotation”.

1.4.2. Manual Migration

The following are rare cases where you may need to take additional steps to migrate your code. You need only read this section if the migration tool reported a warning or if you are having trouble getting your code to compile or run after running the migration. For most users, attention to these things will not be required.

1.4.2.1. xi:include

The use of <xi:include> in UIMA component descriptors has been discouraged for some time, and in Apache UIMA support for it has been removed. If you have descriptors that use that, you must change them to use UIMA's <import> syntax instead. The proper syntax is described in Section 2.2, “Imports”.

1.4.2.2. Duplicate Methods Taking CAS and TCAS as Arguments

Because TCAS has been replaced by CAS, if you had two methods distinguished only by whether an argument type was TCAS or CAS, the migration tool will cause these to have identical signatures, which will be a compile error. If this happens, consider why the two variants were needed in the first place. Often, it may work to simply delete one of the methods.

1.4.2.3. Use of Undocumented Methods from the com.ibm.uima.util package

Previous UIMA versions had some methods in the com.ibm.uima.util package that were for internal use and were not documented in the Javadoc. (There are also many methods in that package which are documented, and there is no issue with using these.) It is not recommended that you use any of the undocumented methods. If you do, the migration script will not handle them correctly. These have now been moved to org.apache.uima.internal.util, and you will have to manually update your imports to point to this location.

1.4.2.4. Use of UIMA Package Names for User Code

If you have placed your own classes in a package that has exactly the same name as one of the UIMA packages (not recommended), this will cause problems when you run the migration script. Since the script replaces UIMA package names, all of your imports that refer to your class will get replaced and your code will no longer compile. If this happens, you can fix it by manually moving your code to the new Apache UIMA package name (i.e., whatever name your imports got replaced with). However, we recommend instead that you do not use Apache UIMA package names for your own code.

An even rarer case would be if you had a package name that started with a capital letter (poor Java style) AND was prefixed by one of the UIMA package names, for example a package named com.ibm.uima.MyPackage. This would be treated as a class name and replaced with org.apache.uima.MyPackage wherever it occurs.

1.4.2.5. CASException and CASRuntimeException now extend UIMA(Runtime)Exception

This change may affect user code to a small extent, as some of the APIs on CASException and CASRuntimeException no longer exist. On the up side, all UIMA exceptions are now derived from the same base classes and behave the same way. The most significant change is that you can no longer check for the specific type of exception the way you used to. For example, if you had code like this:

    catch (CASRuntimeException e) {
      if (e.getError() == CASRuntimeException.ILLEGAL_ARRAY_SIZE) {
        // Do something in case this particular error is caught
      }
    }

you will need to replace it with the following:

    catch (CASRuntimeException e) {
      if (e.getMessageKey().equals(CASRuntimeException.ILLEGAL_ARRAY_SIZE)) {
        // Do something in case this particular error is caught
      }
    }

as the message keys are now strings. This change is not handled by the migration script.
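The reason for switching from == to equals can be shown with a self-contained stand-in. SketchCasRuntimeException below is a hypothetical class written for this illustration, not the UIMA CASRuntimeException, and the value of its ILLEGAL_ARRAY_SIZE constant is an assumption.

```java
// Hypothetical stand-in that mimics a string message key; not the UIMA class.
final class MessageKeySketch {
    static final class SketchCasRuntimeException extends RuntimeException {
        private static final long serialVersionUID = 1L;
        static final String ILLEGAL_ARRAY_SIZE = "ILLEGAL_ARRAY_SIZE"; // assumed value
        private final String messageKey;

        SketchCasRuntimeException(String messageKey) {
            super(messageKey);
            this.messageKey = messageKey;
        }

        String getMessageKey() {
            return messageKey;
        }
    }

    // Compares key contents; == would compare object identity and can miss equal strings.
    static boolean isIllegalArraySize(RuntimeException e) {
        return e instanceof SketchCasRuntimeException
            && SketchCasRuntimeException.ILLEGAL_ARRAY_SIZE.equals(
                   ((SketchCasRuntimeException) e).getMessageKey());
    }

    public static void main(String[] args) {
        // A key built at run time is a different object from the constant.
        String key = new String("ILLEGAL_ARRAY_SIZE");
        try {
            throw new SketchCasRuntimeException(key);
        } catch (SketchCasRuntimeException e) {
            System.out.println(isIllegalArraySize(e));
        }
    }
}
```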

1.5. Apache UIMA Summary

1.5.1. General

UIMA supports the development, discovery, composition and deployment of multi-modal analytics for the analysis of unstructured information and its integration with search technologies.

Apache UIMA includes APIs and tools for creating analysis components. Examples of analysis components include tokenizers, summarizers, categorizers, parsers, named-entity detectors etc. Tutorial examples are provided with Apache UIMA; additional components are available from the community.

Apache UIMA does not itself include a semantic search engine. Instructions are included for incorporating the semantic search SDK from IBM's alphaWorks, which can index the results of analysis, and for using this semantic index to perform more advanced search.

1.5.2. Programming Language Support

UIMA supports the development and integration of analysis algorithms developed in different programming languages.

The Apache UIMA project is both a Java framework and a matching C++ enablement layer, which allows annotators to be written in C++ and have access to a C++ version of the CAS. The C++ enablement layer also enables annotators to be written in Perl, Python, and TCL, and to interoperate with those written in other languages.

1.5.3. Multi-Modal Support

The UIMA architecture supports the development, discovery, composition and deployment of multi-modal analytics, including text, audio and video. Chapter 5, Annotations, Artifacts, and Sofas discusses this in more detail.

1.5.4. Semantic Search Components

The Lucene search engine as of this writing (November, 2006) does not support searching with annotations. The site http://www.alphaworks.ibm.com/tech/uima provides a download of a semantic search engine, a simple demo query tool, some documentation on the semantic search engine, and a component that connects the results of UIMA analysis to the indexer so that the annotations as well as key-words can be indexed.

Previous versions of the UIMA SDK (prior to the Apache versions) are available from IBM's alphaWorks. The source code for previous versions of the main UIMA framework is available on SourceForge.

1.6. Summary of Apache UIMA Capabilities

| Module | Description |
| --- | --- |
| UIMA Framework Core | A framework integrating core functions for creating, deploying, running and managing UIMA components, including analysis engines and Collection Processing Engines in collocated and/or distributed configurations. The framework includes an implementation of core components for transport layer adaptation, CAS management, workflow management based on declarative specifications, resource management, configuration management, logging, and other functions. |
| C++ and other programming language Interoperability | Includes C++ CAS and supports the creation of UIMA compliant C++ components that can be deployed in the UIMA run-time through a built-in JNI adapter. This includes high-speed binary serialization. Includes support for creating service-based UIMA engines. This is ideal for wrapping existing code written in different languages. |
| Framework Services and APIs | Note that the interfaces of these components are available to the developer, but different implementations are possible in different implementations of the UIMA framework. |
| CAS | These classes provide the developer with typed access to the Common Analysis Structure (CAS), including type system schema, elements, subjects of analysis and indices. The multiple subjects of analysis (Sofas) mechanism supports the independent or simultaneous analysis of multiple views of the same artifacts (e.g. documents), supporting multi-lingual and multi-modal analysis. |
| JCas | An alternative interface to the CAS, providing Java-based UIMA Analysis components with native Java object access to CAS types and their attributes or features, using the JavaBeans conventions of getters and setters. |
| Collection Processing Management (CPM) | Core functions for running UIMA collection processing engines in collocated and/or distributed configurations. The CPM provides scalability across parallel processing pipelines, check-pointing, performance monitoring and recoverability. |
| Resource Manager | Provides UIMA components with run-time access to external resources, handling capabilities such as resource naming, sharing, and caching. |
| Configuration Manager | Provides UIMA components with run-time access to their configuration parameter settings. |
| Logger | Provides access to a common logging facility. |
| Tools and Utilities | |
| JCasGen | Utility for generating a Java object model for CAS types from a UIMA XML type system definition. |
| Saving and Restoring CAS contents | APIs in the core framework support saving and restoring the contents of a CAS to streams using an XMI format. |
| PEAR Packager for Eclipse | Tool for building a UIMA component archive to facilitate porting, registering, installing and testing components. |
| PEAR Installer | Tool for installing and verifying a UIMA component archive in a UIMA installation. |
| PEAR Merger | Utility that combines multiple PEARs into one. |
| Component Descriptor Editor | Eclipse Plug-in for specifying and configuring component descriptors for UIMA analysis engines as well as other UIMA component types including Collection Readers and CAS Consumers. |
| CPE Configurator | Graphical tool for configuring Collection Processing Engines and applying them to collections of documents. |
| Java Annotation Viewer | Viewer for exploring annotations and related CAS data. |
| CAS Visual Debugger | GUI Java application that provides developers with a detailed visual view of the contents of a CAS. |
| Document Analyzer | GUI Java application that applies analysis engines to sets of documents and shows results in a viewer. |
| Example Analysis Components | |
| Database Writer | CAS Consumer that writes the content of selected CAS types into a relational database, using JDBC. This code is in cpe/PersonTitleDBWriterCasConsumer. |
| Annotators | Set of simple annotators meant for pedagogical purposes. Includes: Date/time, Room-number, Regular expression, Tokenizer, and Meeting-finder annotator. There are sample CAS Multipliers as well. |
| Flow Controllers | There is a sample flow-controller based on the whiteboard concept of sending the CAS to whatever annotator hasn't yet processed it, when that annotator's inputs are available in the CAS. |
| XMI Collection Reader, CAS Consumer | Reads and writes the CAS in the XMI format. |
| File System Collection Reader | Simple Collection Reader for pulling documents from the file system and initializing CASes. |
| Components available from www.alphaworks.ibm.com/tech/uima | |
| Semantic Search CAS Indexer | A CAS Consumer that uses the semantic search engine indexer to build an index from a stream of CASes. Requires the semantic search engine (available from the same place). |

Chapter 2. UIMA Conceptual Overview

UIMA is an open, industrial-strength, scalable and extensible platform for creating, integrating and deploying unstructured information management solutions from powerful text or multi-modal analysis and search components.

The Apache UIMA project is an implementation of the Java UIMA framework available under the Apache License, providing a common foundation for industry and academia to collaborate and accelerate the world-wide development of technologies critical for discovering vital knowledge present in the fastest growing sources of information today.

This chapter presents an introduction to many essential UIMA concepts. It is meant to provide a broad overview to give the reader a quick sense of UIMA's basic architectural philosophy and the UIMA SDK's capabilities.

This chapter provides a general orientation to UIMA and makes liberal reference to the other chapters in the UIMA SDK documentation set, where the reader may find detailed treatments of key concepts and development practices. It may be useful to refer to the Glossary to become familiar with the terminology in this overview.

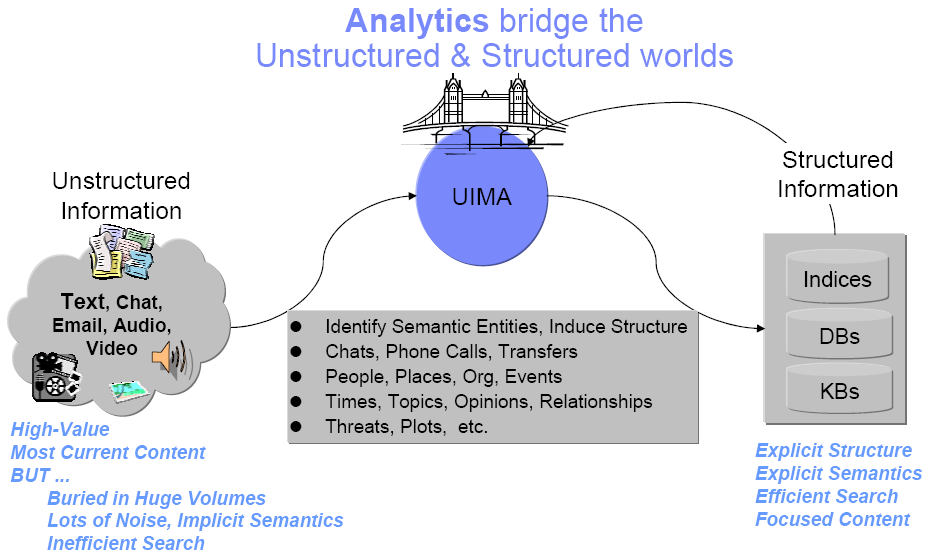

2.1. UIMA Introduction

Unstructured information represents the largest, most current and fastest growing source of information available to businesses and governments. The web is just the tip of the iceberg. Consider the mounds of information hosted in the enterprise and around the world and across different media including text, voice and video. The high-value content in these vast collections of unstructured information is, unfortunately, buried in lots of noise. Searching for what you need or doing sophisticated data mining over unstructured information sources presents new challenges.

An unstructured information management (UIM) application may be generally characterized as a software system that analyzes large volumes of unstructured information (text, audio, video, images, etc.) to discover, organize and deliver relevant knowledge to the client or application end-user. An example is an application that processes millions of medical abstracts to discover critical drug interactions. Another example is an application that processes tens of millions of documents to discover key evidence indicating probable competitive threats.

First and foremost, the unstructured data must be analyzed to interpret, detect and locate concepts of interest, for example, named entities like persons, organizations, locations, facilities, products etc., that are not explicitly tagged or annotated in the original artifact. More challenging analytics may detect things like opinions, complaints, threats or facts. And then there are relations, for example, located in, finances, supports, purchases, repairs etc. The list of concepts important for applications to discover in unstructured content is large, varied and often domain specific. Many different component analytics may solve different parts of the overall analysis task. These component analytics must interoperate and must be easily combined to facilitate the development of UIM applications.

The results of analysis are used to populate structured forms so that conventional data processing and search technologies like search engines, database engines or OLAP (On-Line Analytical Processing, or Data Mining) engines can efficiently deliver the newly discovered content in response to the client requests or queries.

In analyzing unstructured content, UIM applications make use of a variety of analysis technologies including:

- Statistical and rule-based Natural Language Processing (NLP)
- Information Retrieval (IR)
- Machine learning
- Ontologies
- Automated reasoning
- Knowledge Sources (e.g., CYC, WordNet, FrameNet, etc.)

Specific analysis capabilities using these technologies are developed independently using different techniques, interfaces and platforms.

The bridge from the unstructured world to the structured world is built through the composition and deployment of these analysis capabilities. This integration is often a costly challenge.

The Unstructured Information Management Architecture (UIMA) is an architecture and software framework that helps you build that bridge. It supports creating, discovering, composing and deploying a broad range of analysis capabilities and linking them to structured information services.

UIMA allows development teams to match the right skills with the right parts of a solution and helps enable rapid integration across technologies and platforms using a variety of different deployment options. These range from tightly-coupled deployments for high-performance, single-machine, embedded solutions to parallel and fully distributed deployments for highly flexible and scalable solutions.

2.2. The Architecture, the Framework and the SDK

UIMA is a software architecture which specifies component interfaces, data representations, design patterns and development roles for creating, describing, discovering, composing and deploying multi-modal analysis capabilities.

The UIMA framework provides a run-time environment in which developers can plug in their UIMA component implementations and with which they can build and deploy UIM applications. The framework is not specific to any IDE or platform. Apache hosts a Java and (soon) a C++ implementation of the UIMA Framework.

The UIMA Software Development Kit (SDK) includes the UIMA framework, plus tools and utilities for using UIMA. Some of the tooling supports an Eclipse-based (http://www.eclipse.org/) development environment.

2.3. Analysis Basics

Key UIMA Concepts Introduced in this Section:

Analysis Engine, Document, Annotator, Annotator Developer, Type, Type System, Feature, Annotation, CAS, Sofa, JCas, UIMA Context.

2.3.1. Analysis Engines, Annotators & Results

UIMA is an architecture in which basic building blocks called Analysis Engines (AEs) are composed to analyze a document and infer and record descriptive attributes about the document as a whole, and/or about regions therein. This descriptive information, produced by AEs, is referred to generally as analysis results. Analysis results typically represent meta-data about the document content. One way to think about AEs is as software agents that automatically discover and record meta-data about original content.

UIMA supports the analysis of different modalities including text, audio and video. The majority of examples we provide are for text. We use the term document, therefore, to generally refer to any unit of content that an AE may process, whether it is a text document or a segment of audio, for example. See Chapter 6, Multiple CAS Views of an Artifact for more information on multimodal processing in UIMA.

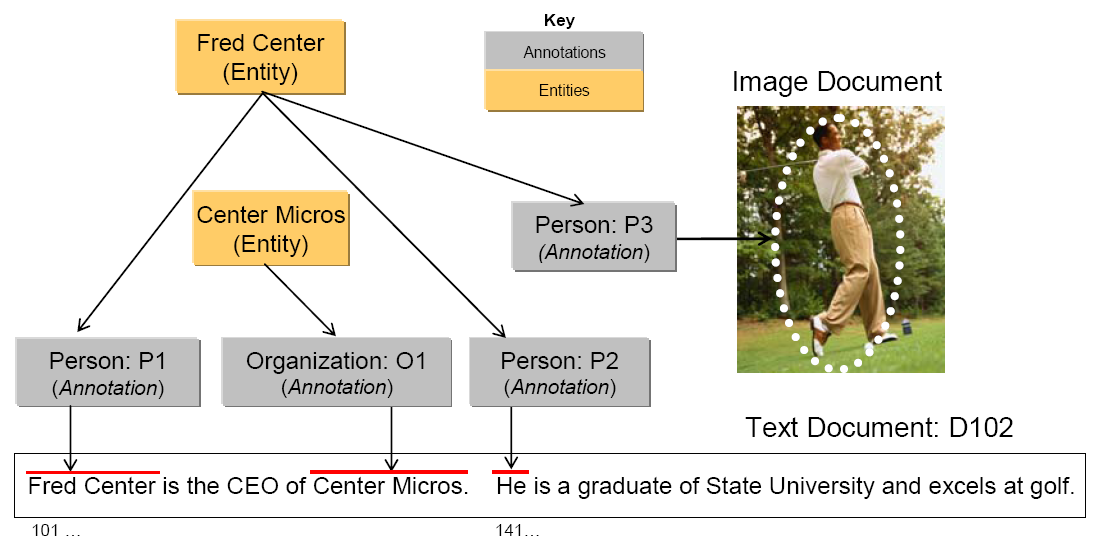

Analysis results include different statements about the content of a document. For example, the following is an assertion about the topic of a document:

(1) The Topic of document D102 is "CEOs and Golf".

Analysis results may include statements describing regions more granular than the entire document. We use the term span to refer to a sequence of characters in a text document. Consider that a document with the identifier D102 contains a span, “Fred Center”, starting at character position 101. An AE that can detect persons in text may represent the following statement as an analysis result:

(2) The span from position 101 to 112 in document D102 denotes a Person

In both statements 1 and 2 above there is a special pre-defined term or what we call in UIMA a Type. They are Topic and Person respectively. UIMA types characterize the kinds of results that an AE may create – more on types later.

Other analysis results may relate two statements. For example, an AE might record in its results that two spans are both referring to the same person:

(3) The Person denoted by span 101 to 112 and the Person denoted by span 141 to 143 in document D102 refer to the same Entity.

The above statements are some examples of the kinds of results that AEs may record to describe the content of the documents they analyze. These are not meant to indicate the form or syntax with which these results are captured in UIMA – more on that later in this overview.

The UIMA framework treats Analysis Engines as pluggable, composable, discoverable, managed objects. At the heart of AEs are the analysis algorithms that do all the work to analyze documents and record analysis results.

UIMA provides a basic component type intended to house the core analysis algorithms running inside AEs. Instances of this component are called Annotators. The analysis algorithm developer's primary concern therefore is the development of annotators. The UIMA framework provides the necessary methods for taking annotators and creating analysis engines.

In UIMA the person who codes analysis algorithms takes on the role of the Annotator Developer. Chapter 1, Annotator and Analysis Engine Developer's Guide will take the reader through the details involved in creating UIMA annotators and analysis engines.

At the most primitive level, an AE wraps an annotator, adding the necessary APIs and infrastructure for the composition and deployment of annotators within the UIMA framework. The simplest AE contains exactly one annotator at its core. Complex AEs may contain a collection of other AEs, each potentially containing within them other AEs.

2.3.2. Representing Analysis Results in the CAS

How annotators represent and share their results is an important part of the UIMA architecture. UIMA defines a Common Analysis Structure (CAS) precisely for these purposes.

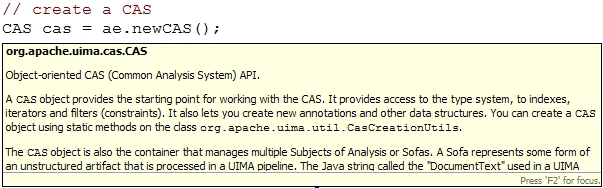

The CAS is an object-based data structure that allows the representation of objects, properties and values. Object types may be related to each other in a single-inheritance hierarchy. The CAS logically (if not physically) contains the document being analyzed. Analysis developers share and record their analysis results in terms of an object model within the CAS. [1]

The UIMA framework includes an implementation and interfaces to the CAS. For a more detailed description of the CAS and its interfaces see Chapter 4, CAS Reference.

A CAS that logically contains statement 2 (repeated here for your convenience)

(2) The span from position 101 to 112 in document D102 denotes a Person

would include objects of the Person type. For each person found in the body of a document, the AE would create a Person object in the CAS and link it to the span of text where the person was mentioned in the document.

While the CAS is a general purpose data structure, UIMA defines a few basic types and affords the developer the ability to extend these to define an arbitrarily rich Type System. You can think of a type system as an object schema for the CAS.

A type system defines the various types of objects that may be discovered in documents by AEs that subscribe to that type system.

As suggested above, Person may be defined as a type. Types have properties or features. So for example, Age and Occupation may be defined as features of the Person type.

Other types might be Organization, Company, Bank, Facility, Money, Size, Price, Phone Number, Phone Call, Relation, Network Packet, Product, Noun Phrase, Verb, Color, Parse Node, Feature Weight Array etc.

There are no limits to the different types that may be defined in a type system. A type system is domain and application specific.

Types in a UIMA type system may be organized into a taxonomy. For example, Company may be defined as a subtype of Organization. NounPhrase may be a subtype of a ParseNode.
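As a rough illustration of the taxonomy idea, the sketch below models a type system as a map from each type name to its single supertype, plus a list of feature names per type. The `TypeSystemSketch` class and its methods are hypothetical and are not the UIMA type system API; only the type and feature names (Person, Age, Occupation, Organization, Company, ParseNode, NounPhrase) come from the text above.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of a single-inheritance type system; not the UIMA API.
class TypeSystemSketch {
    private final Map<String, String> parentOf = new HashMap<String, String>();
    private final Map<String, List<String>> featuresOf = new HashMap<String, List<String>>();

    // Declares a type with exactly one supertype (null for a root type).
    void addType(String name, String supertype) {
        parentOf.put(name, supertype);
        featuresOf.put(name, new ArrayList<String>());
    }

    // Declares a feature (property) on an already declared type.
    void addFeature(String type, String feature) {
        featuresOf.get(type).add(feature);
    }

    // Features declared directly on the given type.
    List<String> features(String type) {
        return featuresOf.get(type);
    }

    // True if 'type' is 'ancestor' itself or inherits from it, directly or indirectly.
    boolean subsumes(String ancestor, String type) {
        for (String t = type; t != null; t = parentOf.get(t)) {
            if (t.equals(ancestor)) {
                return true;
            }
        }
        return false;
    }

    // Builds the small example taxonomy mentioned in the text.
    static TypeSystemSketch example() {
        TypeSystemSketch ts = new TypeSystemSketch();
        ts.addType("Person", null);
        ts.addFeature("Person", "Age");
        ts.addFeature("Person", "Occupation");
        ts.addType("Organization", null);
        ts.addType("Company", "Organization");
        ts.addType("ParseNode", null);
        ts.addType("NounPhrase", "ParseNode");
        return ts;
    }
}
```

With this model, `example().subsumes("Organization", "Company")` holds while the reverse does not, which is the sense in which Company is a subtype of Organization.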

2.3.2.1. The Annotation Type

A general and common type used in artifact analysis and from which additional types are often derived is the annotation type.

The annotation type is used to annotate or label regions of an artifact. Common artifacts are text documents, but they can be other things, such as audio streams. The annotation type for text includes two features, namely begin and end. Values of these features represent integer offsets in the artifact and delimit a span. Any particular annotation object identifies the span it annotates with the begin and end features.

The key idea here is that the annotation type is used to identify and label or “annotate” a specific region of an artifact.

Consider that the Person type is defined as a subtype of annotation. An annotator, for example, can create a Person annotation to record the discovery of a mention of a person between position 141 and 143 in document D102. The annotator can create another person annotation to record the detection of a mention of a person in the span between positions 101 and 112.
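The following minimal sketch shows how begin and end offsets delimit a span. The classes `SpanAnnotation` and `PersonMention` are hypothetical stand-ins, not UIMA classes, and the filler text used to build document D102 is invented purely so that the offsets line up. It also assumes, as the example offsets suggest, that the end offset points just past the last character of the span.

```java
// Hypothetical stand-ins for the annotation type and a Person subtype; not UIMA classes.
class SpanAnnotation {
    final int begin; // offset of the first character of the span
    final int end;   // offset just past the last character of the span (assumption)

    SpanAnnotation(int begin, int end) {
        this.begin = begin;
        this.end = end;
    }

    // Returns the text that this annotation labels within the given document.
    String coveredText(String document) {
        return document.substring(begin, end);
    }
}

// Person modelled as a subtype of the annotation type.
class PersonMention extends SpanAnnotation {
    PersonMention(int begin, int end) {
        super(begin, end);
    }
}

class AnnotationSketch {
    // Builds a stand-in for document D102; the '.' padding is invented filler text.
    static String buildDocument() {
        StringBuilder sb = new StringBuilder();
        while (sb.length() < 101) {
            sb.append('.');
        }
        sb.append("Fred Center"); // occupies offsets 101 to 112
        while (sb.length() < 141) {
            sb.append('.');
        }
        sb.append("He");          // occupies offsets 141 to 143
        sb.append(" plays golf.");
        return sb.toString();
    }

    public static void main(String[] args) {
        String d102 = buildDocument();
        System.out.println(new PersonMention(101, 112).coveredText(d102)); // prints "Fred Center"
        System.out.println(new PersonMention(141, 143).coveredText(d102)); // prints "He"
    }
}
```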

2.3.2.2. Not Just Annotations

While the annotation type is a useful type for annotating regions of a document, annotations are not the only kind of types in a CAS. A CAS is a general representation scheme and may store arbitrary data structures to represent the analysis of documents.

As an example, consider statement 3 above (repeated here for your convenience).

(3) The Person denoted by span 101 to 112 and the Person denoted by span 141 to 143 in document D102 refer to the same Entity.

This statement mentions two person annotations in the CAS; the first, call it P1 delimiting the span from 101 to 112 and the other, call it P2, delimiting the span from 141 to 143. Statement 3 asserts explicitly that these two spans refer to the same entity. This means that while there are two expressions in the text represented by the annotations P1 and P2, each refers to one and the same person.

The Entity type may be introduced into a type system to capture this kind of information. The Entity type is not an annotation. It is intended to represent an object in the domain which may be referred to by different expressions (or mentions) occurring multiple times within a document (or across documents within a collection of documents). The Entity type has a feature named occurrences. This feature is used to point to all the annotations believed to label mentions of the same entity.

Consider that the spans annotated by P1 and P2 were “Fred Center” and “He” respectively. The annotator might create a new Entity object called FredCenter. To represent the relationship in statement 3 above, the annotator may link FredCenter to both P1 and P2 by making them values of its occurrences feature.
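The link between an entity and its mentions can be pictured as a plain object reference. In the sketch below, `Mention` and `EntitySketch` are hypothetical classes that only mirror the description above; the real Entity type would be declared in a UIMA type system rather than written by hand like this.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a Person annotation: a labelled span of the document.
class Mention {
    final int begin;
    final int end;

    Mention(int begin, int end) {
        this.begin = begin;
        this.end = end;
    }
}

// An entity is not an annotation: it has no begin or end of its own.
// It points at the annotations believed to mention it.
class EntitySketch {
    final String name;
    final List<Mention> occurrences = new ArrayList<Mention>();

    EntitySketch(String name) {
        this.name = name;
    }

    // True if both mentions are recorded as occurrences of this entity.
    boolean corefers(Mention a, Mention b) {
        return occurrences.contains(a) && occurrences.contains(b);
    }
}

class EntityDemo {
    public static void main(String[] args) {
        Mention p1 = new Mention(101, 112); // "Fred Center"
        Mention p2 = new Mention(141, 143); // "He"
        EntitySketch fredCenter = new EntitySketch("FredCenter");
        fredCenter.occurrences.add(p1);
        fredCenter.occurrences.add(p2);
        System.out.println(fredCenter.corefers(p1, p2)); // prints "true"
    }
}
```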

Figure 2.2, “Objects represented in the Common Analysis Structure (CAS)” also illustrates that an entity may be linked to annotations referring to regions of image documents as well. To do this the annotation type would have to be extended with the appropriate features to point to regions of an image.

2.3.2.3. Multiple Views within a CAS

UIMA supports the simultaneous analysis of multiple views of a document. This support comes in handy for processing multiple forms of the artifact, for example, the audio and the closed captioned views of a single speech stream, or the tagged and detagged views of an HTML document.

AEs analyze one or more views of a document. Each view contains a specific subject of analysis (Sofa), plus a set of indexes holding metadata indexed by that view. The CAS, overall, holds one or more CAS Views, plus the descriptive objects that represent the analysis results for each.

Another common example of using CAS Views is for different translations of a document. Each translation may be represented with a different CAS View. Each translation may be described by a different set of analysis results. For more details on CAS Views and Sofas see Chapter 6, Multiple CAS Views of an Artifact and Chapter 5, Annotations, Artifacts, and Sofas.
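One way to picture the relationship between a CAS, its views and their Sofas is the small model below. It is a sketch under simplifying assumptions: the classes `ViewSketch` and `MultiViewCasSketch` are hypothetical, the Sofa is reduced to a string, and the per-view index is reduced to a list of result labels. The view names "tagged" and "detagged" are illustrative, taken from the HTML example above.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of one view: a subject of analysis plus the results indexed for it.
class ViewSketch {
    final String sofa;                                  // the subject of analysis for this view
    final List<String> index = new ArrayList<String>(); // analysis results indexed by this view

    ViewSketch(String sofa) {
        this.sofa = sofa;
    }
}

// Hypothetical model of a CAS that holds one or more named views; not the UIMA CAS API.
class MultiViewCasSketch {
    private final Map<String, ViewSketch> views = new LinkedHashMap<String, ViewSketch>();

    // Creates a named view over one form of the artifact.
    ViewSketch createView(String name, String sofa) {
        ViewSketch view = new ViewSketch(sofa);
        views.put(name, view);
        return view;
    }

    ViewSketch getView(String name) {
        return views.get(name);
    }

    int viewCount() {
        return views.size();
    }
}

class MultiViewDemo {
    public static void main(String[] args) {
        MultiViewCasSketch cas = new MultiViewCasSketch();
        cas.createView("tagged", "<p>Fred Center</p>");
        ViewSketch detagged = cas.createView("detagged", "Fred Center");
        // Results recorded against one view do not appear in the other.
        detagged.index.add("Person[0,11]");
        System.out.println(cas.getView("tagged").index.size());   // prints "0"
        System.out.println(cas.getView("detagged").index.size()); // prints "1"
    }
}
```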

2.3.3. Interacting with the CAS and External Resources

The two main interfaces that a UIMA component developer interacts with are the CAS and the UIMA Context.

UIMA provides an efficient implementation of the CAS with multiple programming interfaces. Through these interfaces, the annotator developer interacts with the document and reads and writes analysis results. The CAS interfaces provide a suite of access methods that allow the developer to obtain indexed iterators to the different objects in the CAS. See Chapter 4, CAS Reference. While many objects may exist in a CAS, the annotator developer can obtain a specialized iterator to all Person objects associated with a particular view, for example.

For Java annotator developers, UIMA provides the JCas. This interface provides the Java developer with a natural interface to CAS objects. Each type declared in the type system appears as a Java Class; the UIMA framework renders the Person type as a Person class in Java. As the analysis algorithm detects mentions of persons in the documents, it can create Person objects in the CAS. For more details on how to interact with the CAS using this interface, refer to Chapter 5, JCas Reference.

The component developer, in addition to interacting with the CAS, can access external resources through the framework's resource manager interface called the UIMA Context. This interface, among other things, can ensure that different annotators working together in an aggregate flow may share the same instance of an external file or remote resource accessed via its URL, for example. For details on using the UIMA Context see Chapter 1, Annotator and Analysis Engine Developer's Guide.

2.3.4. Component Descriptors

UIMA defines interfaces for a small set of core components that users of the framework provide implementations for. Annotators and Analysis Engines are two of the basic building blocks specified by the architecture. Developers implement them to build and compose analysis capabilities and ultimately applications.

There are other components in addition to these, which we will learn about later, but for every component specified in UIMA there are two parts required for its implementation:

the declarative part and

the code part.

The declarative part contains metadata describing the component, its identity, structure and behavior and is called the Component Descriptor. Component descriptors are represented in XML. The code part implements the algorithm. The code part may be a program in Java.

As a developer using the UIMA SDK, to implement a UIMA component it is always the case that you will provide two things: the code part and the Component Descriptor. Note that when you are composing an engine, the code may be already provided in reusable subcomponents. In these cases you may not be developing new code but rather composing an aggregate engine by pointing to other components where the code has been included.

Component descriptors are represented in XML and aid in component discovery, reuse, composition and development tooling. The UIMA SDK provides a tool for easily creating and maintaining component descriptors, which relieves the developer from editing XML directly. This tool is described briefly in Chapter 1, Annotator and Analysis Engine Developer's Guide, and more thoroughly in Chapter 1, Component Descriptor Editor User's Guide.

Component descriptors contain standard metadata including the component's name, author, version, and a reference to the class that implements the component.

In addition to these standard fields, a component descriptor identifies the type system the component uses and the types it requires in an input CAS and the types it plans to produce in an output CAS.

For example, an AE that detects person types may require as input a CAS that includes a tokenization and deep parse of the document. The descriptor refers to a type system to make the component's input requirements and output types explicit. In effect, the descriptor includes a declarative description of the component's behavior and can be used to aid in component discovery and composition based on desired results. UIMA analysis engines provide an interface for accessing the component metadata represented in their descriptors. For more details on the structure of UIMA component descriptors refer to Chapter 2, Component Descriptor Reference.
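To make the idea of declared inputs and outputs more concrete, the sketch below holds the same kind of metadata in a plain Java object and checks whether a component's input requirements are met. Real descriptors are XML files; the `DescriptorSketch` class, the component name, the implementation class name and the type names Token and ParseNode are all hypothetical choices made for this illustration.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

// Hypothetical in-memory stand-in for the metadata a component descriptor carries.
class DescriptorSketch {
    final String name;                // component name
    final String implementationClass; // class that implements the component
    final Set<String> inputTypes;     // types required in the input CAS
    final Set<String> outputTypes;    // types the component plans to produce

    DescriptorSketch(String name, String implementationClass,
                     String[] inputTypes, String[] outputTypes) {
        this.name = name;
        this.implementationClass = implementationClass;
        this.inputTypes = new HashSet<String>(Arrays.asList(inputTypes));
        this.outputTypes = new HashSet<String>(Arrays.asList(outputTypes));
    }

    // True if every type this component requires is already present in the CAS.
    boolean canRunOn(Set<String> typesInCas) {
        return typesInCas.containsAll(inputTypes);
    }
}

class DescriptorDemo {
    public static void main(String[] args) {
        DescriptorSketch personDetector = new DescriptorSketch(
                "PersonDetector", "example.PersonAnnotator",
                new String[] {"Token", "ParseNode"}, new String[] {"Person"});
        Set<String> tokenizedOnly = new HashSet<String>(Arrays.asList("Token"));
        System.out.println(personDetector.canRunOn(tokenizedOnly)); // prints "false"
    }
}
```

A check of this kind is what lets tooling or an assembler decide, from descriptors alone, whether one component can follow another.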

2.4. Aggregate Analysis Engines

Key UIMA Concepts Introduced in this Section:

Aggregate Analysis Engine, Delegate Analysis Engine, Tightly and Loosely Coupled, Flow Specification, Analysis Engine Assembler

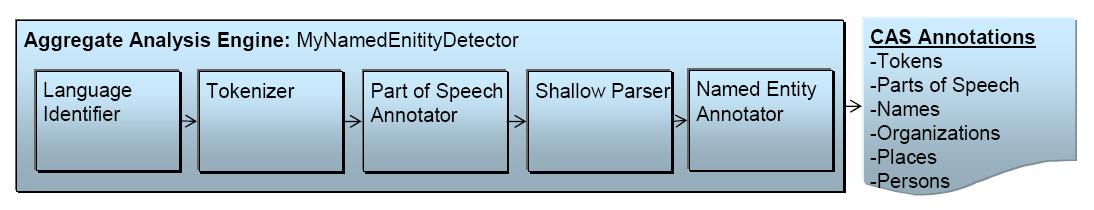

A simple or primitive UIMA Analysis Engine (AE) contains a single annotator. AEs, however, may be defined to contain other AEs organized in a workflow. These more complex analysis engines are called Aggregate Analysis Engines.

Annotators tend to perform fairly granular functions, for example language detection, tokenization or part of speech detection. These functions typically address just part of an overall analysis task. A workflow of component engines may be orchestrated to perform more complex tasks.

An AE that performs named entity detection, for example, may include a pipeline of annotators starting with language detection feeding tokenization, then part-of-speech detection, then deep grammatical parsing and then finally named-entity detection. Each step in the pipeline is required by the subsequent analysis. For example, the final named-entity annotator can only do its analysis if the previous deep grammatical parse was recorded in the CAS.

Aggregate AEs are built to encapsulate potentially complex internal structure and insulate it from users of the AE. In our example, the aggregate analysis engine developer acquires the internal components, defines the necessary flow between them and publishes the resulting AE. Consider the simple example illustrated in Figure 2.3, “Sample Aggregate Analysis Engine” where “MyNamed-EntityDetector” is composed of a linear flow of more primitive analysis engines.

Users of this AE need not know how it is constructed internally but only need its name and its published input requirements and output types. These must be declared in the aggregate AE's descriptor. Aggregate AE descriptors declare the components they contain and a flow specification. The flow specification defines the order in which the internal component AEs should be run. The internal AEs specified in an aggregate are also called the delegate analysis engines. The term "delegate" is used because aggregate AEs are thought to "delegate" functions to their internal AEs.
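The composition described above can be sketched in a few lines of plain Java. This is an illustration only: the interface `EngineSketch` and the classes `StepEngine` and `AggregateSketch` are hypothetical, the CAS is reduced to a list of result labels, and the fixed order of delegates stands in for a linear flow specification.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical "CAS in, CAS out" interface; the CAS is modelled as a list of result labels.
interface EngineSketch {
    void process(List<String> cas);
}

// A primitive engine that requires one earlier result and records one new result.
class StepEngine implements EngineSketch {
    private final String requires; // null if the step needs no earlier result
    private final String produces;

    StepEngine(String requires, String produces) {
        this.requires = requires;
        this.produces = produces;
    }

    public void process(List<String> cas) {
        if (requires != null && !cas.contains(requires)) {
            throw new IllegalStateException(produces + " needs " + requires + " in the CAS");
        }
        cas.add(produces);
    }
}

// An aggregate is itself an engine; it delegates to its internal engines in a fixed order.
class AggregateSketch implements EngineSketch {
    private final List<EngineSketch> delegates;

    AggregateSketch(EngineSketch... delegates) {
        this.delegates = Arrays.asList(delegates);
    }

    public void process(List<String> cas) {
        for (EngineSketch delegate : delegates) {
            delegate.process(cas);
        }
    }

    // The linear named-entity pipeline from the example in the text.
    static AggregateSketch namedEntityDetector() {
        return new AggregateSketch(
                new StepEngine(null, "language"),
                new StepEngine("language", "tokens"),
                new StepEngine("tokens", "partOfSpeech"),
                new StepEngine("partOfSpeech", "deepParse"),
                new StepEngine("deepParse", "namedEntities"));
    }
}
```

Because `AggregateSketch` implements the same interface as its delegates, a caller cannot tell whether it is using a primitive or an aggregate engine, and aggregates can be nested inside other aggregates.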

In UIMA 2.0, the developer can implement a "Flow Controller" and include it as part of an aggregate AE by referring to it in the aggregate AE's descriptor. The flow controller is responsible for computing the "flow", that is, for determining the order in which the delegate AEs will process the CAS. The Flow Controller has access to the CAS and any external resources it may require for determining the flow. It can do this dynamically at run-time, make multi-step decisions, and consider any sort of flow specification included in the aggregate AE's descriptor. See Chapter 4, Flow Controller Developer's Guide for details on the UIMA Flow Controller interface.

We refer to the development role associated with building an aggregate from delegate AEs as the Analysis Engine Assembler.
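A dynamic flow decision can be sketched as a function from the current CAS contents to the next delegate. The class `FlowSketch` below is hypothetical and is not the UIMA Flow Controller interface. Its selection rule (run the first delegate that has not yet run and whose input is available) is an assumption chosen for illustration, loosely in the spirit of the whiteboard-style sample flow controller listed in the capabilities table.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of a dynamic flow decision; not the UIMA Flow Controller interface.
class FlowSketch {
    // Maps each delegate name to the result it needs ("" if none).
    // For simplicity, the result a delegate produces is recorded under the delegate's own name.
    private final Map<String, String> requirementOf = new LinkedHashMap<String, String>();

    void addDelegate(String name, String requiredResult) {
        requirementOf.put(name, requiredResult);
    }

    // Picks the first delegate that has not run yet and whose input is already in the CAS.
    // Returns null when no delegate can run, which ends the flow.
    String next(Set<String> cas) {
        for (Map.Entry<String, String> entry : requirementOf.entrySet()) {
            boolean alreadyRan = cas.contains(entry.getKey());
            boolean inputReady = entry.getValue().isEmpty() || cas.contains(entry.getValue());
            if (!alreadyRan && inputReady) {
                return entry.getKey();
            }
        }
        return null;
    }

    // Runs the flow to completion, recording each delegate's result in the CAS as it runs.
    Set<String> run() {
        Set<String> cas = new LinkedHashSet<String>();
        for (String step = next(cas); step != null; step = next(cas)) {
            cas.add(step);
        }
        return cas;
    }
}
```

Even if the delegates are registered in the order namedEntities, parse, tokens, the run order comes out as tokens, parse, namedEntities, because each decision is made by inspecting the CAS at that moment rather than by following a fixed list.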

The UIMA framework, given an aggregate analysis engine descriptor, will run all delegate AEs, ensuring that each one gets access to the CAS in the sequence produced by the flow controller. The UIMA framework is equipped to handle different deployments where the delegate engines, for example, are tightly-coupled (running in the same process) or loosely-coupled (running in separate processes or even on different machines). The framework supports a number of remote protocols for loose coupling deployments of aggregate analysis engines, including SOAP (which stands for Simple Object Access Protocol, a standard Web Services communications protocol).

The UIMA framework facilitates the deployment of AEs as remote services by using an adapter layer that automatically creates the necessary infrastructure in response to a declaration in the component's descriptor. For more details on creating aggregate analysis engines refer to Chapter 2, Component Descriptor Reference. The component descriptor editor tool assists in the specification of aggregate AEs from a repository of available engines. For more details on this tool refer to Chapter 1, Component Descriptor Editor User's Guide.

The UIMA framework implementation has two built-in flow implementations: one that supports a linear flow between components, and one with conditional branching based on the language of the document. It also supports user-provided flow controllers, as described in Chapter 4, Flow Controller Developer's Guide. Furthermore, the application developer is free to create multiple AEs and provide their own logic to combine the AEs in arbitrarily complex flows. For more details on this the reader may refer to Section 3.2, “Using Analysis Engines”.

2.5. Application Building and Collection Processing

Key UIMA Concepts Introduced in this Section:

Process Method, Collection Processing Architecture, Collection Reader, CAS Consumer, CAS Initializer, Collection Processing Engine, Collection Processing Manager.

2.5.1. Using the framework from an Application

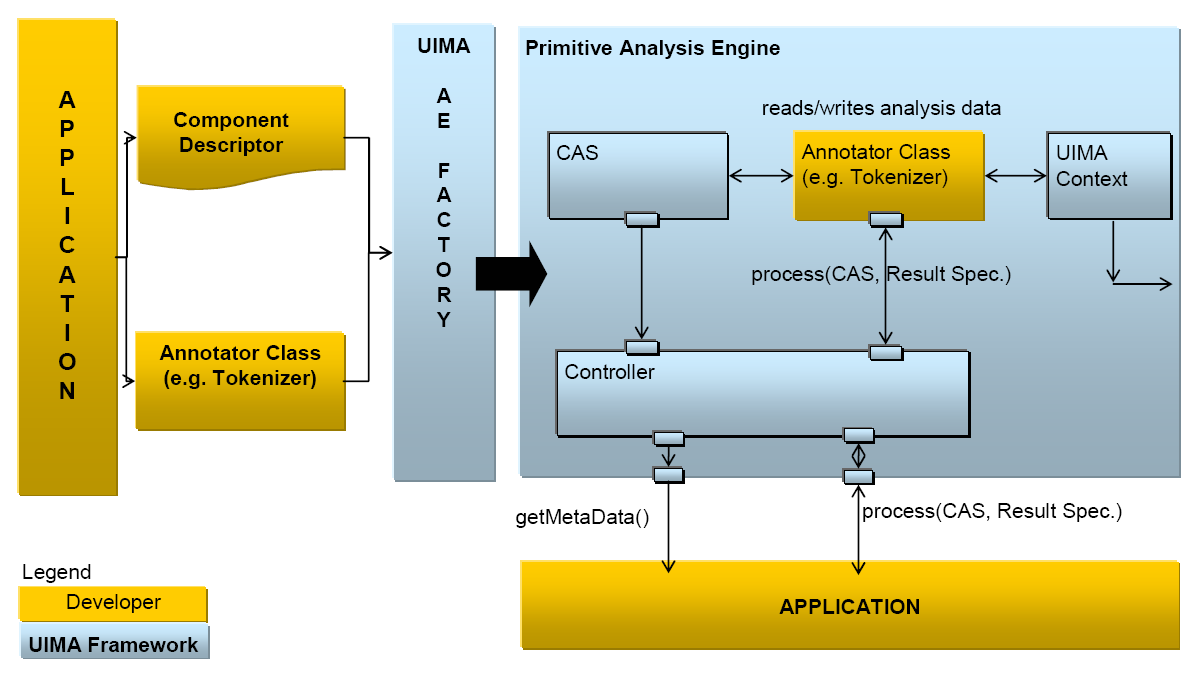

As mentioned above, the basic AE interface may be thought of as simply CAS in/CAS out.

The application is responsible for interacting with the UIMA framework to instantiate an AE, create or acquire an input CAS, initialize the input CAS with a document and then pass it to the AE through the process method. This interaction with the framework is illustrated in Figure 2.4, “Using UIMA Framework to create and interact with an Analysis Engine”.

The UIMA AE Factory takes the declarative information from the Component Descriptor and the class files implementing the annotator, and instantiates the AE instance, setting up the CAS and the UIMA Context.

The AE, possibly calling many delegate AEs internally, performs the overall analysis and its process method returns the CAS containing new analysis results.

The application then decides what to do with the returned CAS. There are many possibilities. For instance, the application could display the results, store the CAS to disk for post-processing, or extract and index analysis results as part of a search or database application.

The UIMA framework provides methods to support the application developer in creating and managing CASes and instantiating, running and managing AEs. Details may be found in Chapter 3, Application Developer's Guide.
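The "CAS in/CAS out" interaction described in this section can be sketched with plain Java stand-ins. This is a minimal illustration, not the UIMA SDK API: `ToyCas`, `ToyEngine` and `ApplicationSketch` are hypothetical names, and the toy CAS holds only a text and a list of strings. The real calls are covered in the Application Developer's Guide.

```java
import java.util.ArrayList;
import java.util.List;

// Toy stand-ins with hypothetical names; these are not UIMA SDK classes.
class ToyCas {
    String documentText;
    final List<String> annotations = new ArrayList<>();

    // Clear the previous document so the same object can be reused.
    void reset() {
        documentText = null;
        annotations.clear();
    }
}

interface ToyEngine {
    // CAS in / CAS out: results are added to the CAS that was passed in.
    void process(ToyCas cas);
}

class ApplicationSketch {
    // Plays the application's role: initialize the CAS, call process, use the results.
    static List<String> analyze(ToyEngine engine, ToyCas cas, String text) {
        cas.reset();
        cas.documentText = text;
        engine.process(cas);
        return new ArrayList<>(cas.annotations);
    }

    public static void main(String[] args) {
        // A trivial "annotator" that records each whitespace-separated token.
        ToyEngine tokenizer = cas -> {
            for (String token : cas.documentText.trim().split("\\s+")) {
                if (!token.isEmpty()) {
                    cas.annotations.add("Token:" + token);
                }
            }
        };
        ToyCas cas = new ToyCas();
        System.out.println(analyze(tokenizer, cas, "UIMA analyzes text"));
        System.out.println(analyze(tokenizer, cas, "second document"));
    }
}
```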

2.5.2. Graduating to Collection Processing

Many UIM applications analyze entire collections of documents. They connect to different document sources and do different things with the results. But in the typical case, the application must generally follow these logical steps:

1. Connect to a physical source

2. Acquire a document from the source

3. Initialize a CAS with the document to be analyzed

4. Send the CAS to a selected analysis engine

5. Process the resulting CAS

6. Go back to 2 until the collection is processed

7. Do any final processing required after all the documents in the collection have been analyzed
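Written as a hand-rolled loop, the logical steps above look roughly like the following sketch. It uses only the Java standard library; `CollectionLoopSketch` and `run` are hypothetical names, a plain `String` stands in for the CAS, and the "final processing" step simply appends a count.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.function.Function;

// Hypothetical helper for illustration; not part of the UIMA SDK.
class CollectionLoopSketch {

    static List<String> run(Iterable<String> source, Function<String, String> engine) {
        List<String> sink = new ArrayList<>();
        int count = 0;
        Iterator<String> documents = source.iterator();  // connect to the source
        while (documents.hasNext()) {                     // repeat until the collection is done
            String document = documents.next();           // acquire a document
            String cas = document;                        // initialize a "CAS" with it
            String analyzed = engine.apply(cas);          // send the CAS to the engine
            sink.add(analyzed);                           // process the resulting CAS
            count++;
        }
        sink.add("documents processed: " + count);        // final processing
        return sink;
    }

    public static void main(String[] args) {
        List<String> out = run(Arrays.asList("first doc", "second doc"), String::toUpperCase);
        System.out.println(out);
    }
}
```

Every application that processes a collection ends up repeating some version of this loop, which is the motivation for factoring it into reusable components.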

UIMA supports UIM application development for this general type of processing through its Collection Processing Architecture.

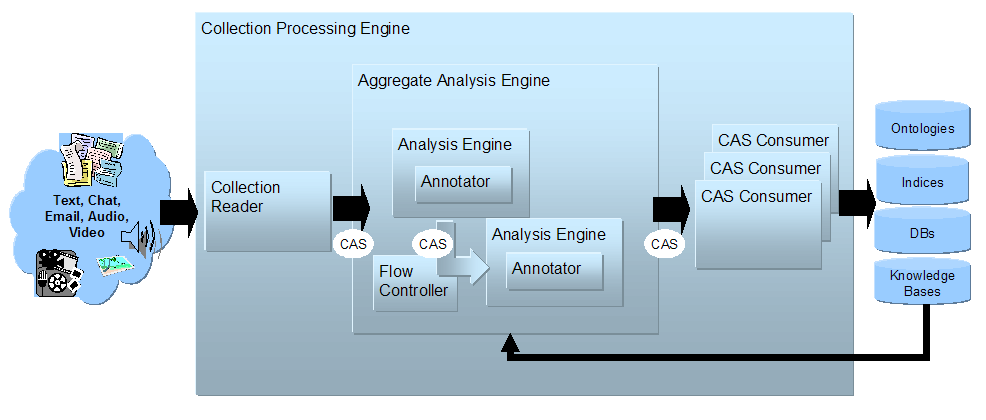

As part of the collection processing architecture UIMA introduces two primary components in addition to the annotator and analysis engine. These are the Collection Reader and the CAS Consumer. The complete flow from source, through document analysis, and to CAS Consumers supported by UIMA is illustrated in Figure 2.5, “High-Level UIMA Component Architecture from Source to Sink”.

The Collection Reader's job is to connect to and iterate through a source collection, acquiring documents and initializing CASes for analysis.

CAS Consumers, as the name suggests, function at the end of the flow. Their job is to do the final CAS processing. A CAS Consumer may be implemented, for example, to index CAS contents in a search engine, extract elements of interest and populate a relational database or serialize and store analysis results to disk for subsequent and further analysis.

A Semantic Search engine that works with UIMA is available from IBM's alphaWorks site. It allows the developer to experiment with indexing analysis results and querying for documents based on all the annotations in the CAS. See the section on integrating text analysis and search in Chapter 3, Application Developer's Guide.

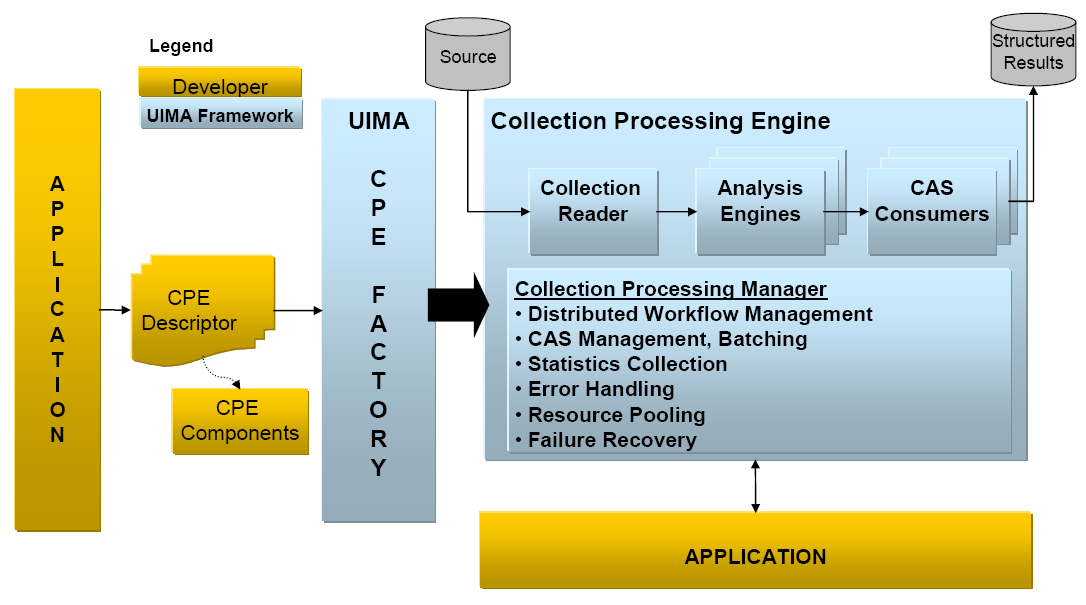

A UIMA Collection Processing Engine (CPE) is an aggregate component that specifies a “source to sink” flow from a Collection Reader through a set of analysis engines and then to a set of CAS Consumers.

CPEs are specified by XML files called CPE Descriptors. These are declarative specifications that point to their contained components (Collection Readers, analysis engines and CAS Consumers) and indicate a flow among them. The flow specification allows for filtering capabilities to, for example, skip over AEs based on CAS contents. Details about the format of CPE Descriptors may be found in Chapter 3, Collection Processing Engine Descriptor Reference.

The UIMA framework includes a Collection Processing Manager (CPM). The CPM is capable of reading a CPE descriptor, and deploying and running the specified CPE. Figure 2.5, “High-Level UIMA Component Architecture from Source to Sink” illustrates the role of the CPM in the UIMA Framework.

Key features of the CPM are failure recovery, CAS management and scale-out.

Collections may be large and take considerable time to analyze. A configurable behavior of the CPM is to log faults on single document failures while continuing to process the collection. This behavior is commonly used because analysis components tend to be the weakest link; in practice they may choke on strangely formatted content.
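The "log the fault and keep going" behavior can be illustrated with a few lines of plain Java. This is only a sketch of the idea, not how the CPM is implemented or configured: `FaultTolerantLoopSketch` and its fields are hypothetical names, and faults are collected in a list rather than written to a log.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical sketch of per-document fault handling; not the CPM itself.
class FaultTolerantLoopSketch {
    final List<String> faults = new ArrayList<>();
    int processed = 0;

    void runAll(List<String> documents, Consumer<String> analysis) {
        for (String document : documents) {
            try {
                analysis.accept(document);
                processed++;
            } catch (RuntimeException e) {
                // Record the fault for this document and continue with the next one.
                faults.add(document + ": " + e.getMessage());
            }
        }
    }

    public static void main(String[] args) {
        FaultTolerantLoopSketch loop = new FaultTolerantLoopSketch();
        loop.runAll(Arrays.asList("doc-1", "bad-2", "doc-3"), document -> {
            if (document.startsWith("bad")) {
                throw new IllegalStateException("malformed content");
            }
        });
        System.out.println(loop.processed + " processed, faults: " + loop.faults);
    }
}
```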

This deployment option requires that the CPM run in a separate process or a machine distinct from the CPE components. A CPE may be configured to run with a variety of deployment options that control the features provided by the CPM. For details see Chapter 3, Collection Processing Engine Descriptor Reference.

The UIMA SDK also provides a tool called the CPE Configurator. This tool provides the developer with a user interface that simplifies the process of connecting up all the components in a CPE and running the result. For details on using the CPE Configurator see Chapter 2, Collection Processing Engine Configurator User's Guide. This tool currently does not provide access to the full set of CPE deployment options supported by the CPM; however, you can configure other parts of the CPE descriptor by editing it directly. For details on how to create and run CPEs refer to Chapter 2, Collection Processing Engine Developer's Guide.

2.6. Exploiting Analysis Results

Key UIMA Concepts Introduced in this Section:

Semantic Search, XML Fragment Queries.

2.6.1. Semantic Search

In a simple UIMA Collection Processing Engine (CPE), a Collection Reader reads documents from the file system and initializes CASs with their content. These are then fed to an AE that annotates tokens and sentences. The CASs, now enriched with token and sentence information, are passed to a CAS Consumer that populates a search engine index.

The search engine query processor can then use the token index to provide basic key-word search. For example, given a query “center” the search engine would return all the documents that contained the word “center”.

Semantic Search is a search paradigm that can exploit the additional metadata generated by analytics like a UIMA CPE.

Suppose we plug a named-entity recognizer into the CPE described above. Assume this analysis engine can detect mentions of persons and organizations in documents and annotate them in the CAS.

To complement the named-entity recognizer, we add a CAS Consumer that extracts, in addition to token and sentence annotations, the person and organization annotations added to the CASs by the named-entity recognizer. It then feeds these into the semantic search engine's index.

The semantic search engine that comes with the UIMA SDK, for example, can exploit this additional information from the CAS to support more powerful queries. For example, imagine a user is looking for documents that mention an organization with “center” in its name but is not sure of the full or precise name of the organization. A key-word search on “center” would likely return far too many documents because “center” is a common and ambiguous term. The semantic search engine that is available from http://www.alphaworks.ibm.com/tech/uima supports a query language called XML Fragments. This query language is designed to exploit the CAS annotations entered in its index. The XML Fragment query, for example,

<organization> center </organization>

will produce only documents that contain “center” where it appears as part of a mention annotated as an organization by the named-entity recognizer. This will likely be a much shorter list of documents, more precisely matching the user's interest.
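The contrast between key-word search and an annotation-aware query can be mimicked with a toy in-memory model. The sketch below is not the semantic search engine or its XML Fragment parser: `SemanticSearchSketch`, `Mention`, `Doc`, `keywordSearch` and `typedSearch` are hypothetical names, the sample documents are invented, and matching is a simple case-insensitive substring test.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Toy in-memory model; hypothetical names, not the semantic search engine's API.
class SemanticSearchSketch {

    static class Mention {
        final String type;  // annotation type, e.g. "organization"
        final String text;  // text covered by the annotation
        Mention(String type, String text) { this.type = type; this.text = text; }
    }

    static class Doc {
        final String id;
        final String text;
        final List<Mention> mentions;
        Doc(String id, String text, List<Mention> mentions) {
            this.id = id; this.text = text; this.mentions = mentions;
        }
    }

    // Case-insensitive substring test used by both searches.
    private static boolean containsIgnoreCase(String text, String word) {
        return text.toLowerCase().contains(word.toLowerCase());
    }

    // Key-word search: any document whose text contains the word.
    static List<String> keywordSearch(List<Doc> docs, String word) {
        List<String> hits = new ArrayList<>();
        for (Doc doc : docs) {
            if (containsIgnoreCase(doc.text, word)) hits.add(doc.id);
        }
        return hits;
    }

    // Annotation-aware search: the word must occur inside a mention of the given type.
    static List<String> typedSearch(List<Doc> docs, String type, String word) {
        List<String> hits = new ArrayList<>();
        for (Doc doc : docs) {
            for (Mention m : doc.mentions) {
                if (m.type.equals(type) && containsIgnoreCase(m.text, word)) {
                    hits.add(doc.id);
                    break; // one matching mention is enough for this document
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        List<Doc> docs = Arrays.asList(
            new Doc("d1", "The shopping center opens at nine.",
                    Collections.<Mention>emptyList()),
            new Doc("d2", "She joined the Example Research Center last year.",
                    Arrays.asList(new Mention("organization", "Example Research Center"))));
        System.out.println(keywordSearch(docs, "center"));
        System.out.println(typedSearch(docs, "organization", "center"));
    }
}
```

The key-word search matches both sample documents, while the typed search keeps only the one in which “center” falls inside an organization mention.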

Consider taking this one step further. We add a relationship recognizer that annotates mentions of the CEO-of relationship. We configure the CAS Consumer so that it sends these new relationship annotations to the semantic search index as well. With these additional analysis results in the index we can submit queries like

<ceo_of>

<person> center </person>

<organization> center </organization>

</ceo_of>

This query will precisely target documents that contain a mention of an organization with “center” as part of its name where that organization is mentioned as part of a CEO-of relationship annotated by the relationship recognizer.

For more details about using UIMA and Semantic Search see the section on integrating text analysis and search in Chapter 3, Application Developer's Guide.

2.6.2. Databases

Search engine indices are not the only place to deposit analysis results for use by applications. Another classic example is populating databases. While many approaches are possible, with varying degrees of flexibility and performance, all are highly dependent on application specifics. We included a simple sample CAS Consumer that provides the basics for getting your analysis results into a relational database. It extracts annotations from a CAS and writes them to a relational database, using the open source Apache Derby database.

2.7. Multimodal Processing in UIMA

In previous sections we've seen how the CAS is initialized with an initial artifact that will be subsequently analyzed by analysis engines and CAS Consumers. The first analysis engine may make some assertions about the artifact, for example, in the form of annotations. Subsequent analysis engines will make further assertions about both the artifact and previous analysis results, and finally one or more CAS Consumers will extract information from these CASs for structured information storage.

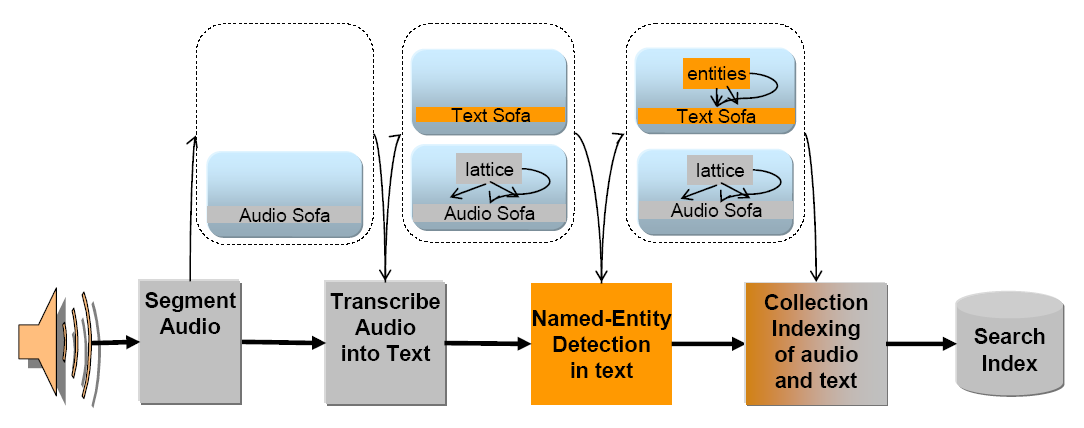

Figure 2.7. Multiple Sofas in support of multi-modal analysis of an audio Stream. Some engines work on the audio “view”, some on the text “view” and some on both.

Consider a processing pipeline, illustrated in Figure 2.7, “Multiple Sofas in support of multi-modal analysis of an audio Stream. Some engines work on the audio “view”, some on the text “view” and some on both.”, that starts with an audio recording of a conversation, transcribes the audio into text, and then extracts information from the text transcript. Analysis Engines at the start of the pipeline are analyzing an audio subject of analysis, and later analysis engines are analyzing a text subject of analysis. The CAS Consumer will likely want to build a search index from concepts found in the text to the original audio segment covered by the concept.

What becomes clear from this relatively simple scenario is that the CAS must be capable of simultaneously holding multiple subjects of analysis. Some analysis engines will analyze only one subject of analysis, some will analyze one and create another, and some will need to access multiple subjects of analysis at the same time.

The support in UIMA for multiple subjects of analysis is called Sofa support. Sofa is an acronym derived from Subject of Analysis; a Sofa is a physical representation of an artifact (e.g., the detagged text of a web-page, the HTML text of the same web-page, the audio segment of a video, the closed-caption text of the same audio segment). A Sofa may be associated with CAS Views. A particular CAS will have one or more views, each view corresponding to a particular subject of analysis, together with a set of the defined indexes that index the metadata created in that view.

Analysis results can be indexed in, or “belong” to, a specific view. UIMA components may be written in “Multi-View” mode - able to create and access multiple Sofas at the same time, or in “Single-View” mode, simply receiving a particular view of the CAS corresponding to a particular single Sofa. For single-view mode components, it is up to the person assembling the component to supply the needed information to ensure a particular view is passed to the component at run time. This is done using XML descriptors for Sofa mapping (see Section 6.4, “Sofa Name Mapping”).

Multi-View capability brings benefits to text-only processing as well. An input document can be transformed from one format to another. Examples of this include transforming text from HTML to plain text or from one natural language to another.
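The relationship between a CAS, its views and the components that use them can be pictured with a toy model. The sketch below is not the UIMA CAS API: `MultiViewSketch`, `View`, `ToyMultiViewCas`, `detag` and `annotateTokens` are hypothetical names, the view names are invented, and the HTML-to-text step is a crude regular-expression strip used only for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of a CAS with several views; hypothetical names, not the UIMA API.
class MultiViewSketch {

    static class View {
        final String sofaData;                          // the subject of analysis
        final List<String> index = new ArrayList<>();   // results that belong to this view
        View(String sofaData) { this.sofaData = sofaData; }
    }

    static class ToyMultiViewCas {
        private final Map<String, View> views = new LinkedHashMap<>();

        View createView(String name, String sofaData) {
            if (views.containsKey(name)) {
                throw new IllegalArgumentException("view already exists: " + name);
            }
            View view = new View(sofaData);
            views.put(name, view);
            return view;
        }

        View getView(String name) {
            View view = views.get(name);
            if (view == null) {
                throw new IllegalArgumentException("no such view: " + name);
            }
            return view;
        }
    }

    // Multi-view style component: reads one Sofa and creates another.
    static void detag(ToyMultiViewCas cas, String fromView, String toView) {
        String html = cas.getView(fromView).sofaData;
        cas.createView(toView, html.replaceAll("<[^>]*>", ""));
    }

    // Single-view style component: it is handed one view and never sees the others.
    static void annotateTokens(View view) {
        for (String token : view.sofaData.trim().split("\\s+")) {
            if (!token.isEmpty()) view.index.add("Token:" + token);
        }
    }

    public static void main(String[] args) {
        ToyMultiViewCas cas = new ToyMultiViewCas();
        cas.createView("html", "<p>Hello <b>world</b></p>");
        detag(cas, "html", "plainText");
        // The assembler, not the component, decides which view is passed in.
        annotateTokens(cas.getView("plainText"));
        System.out.println(cas.getView("plainText").sofaData);
        System.out.println(cas.getView("plainText").index);
    }
}
```

Choosing which view to hand to `annotateTokens` in `main` plays the role that Sofa mapping plays for single-view components: the component itself stays unaware of view names.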

2.8. Next Steps

This chapter presented a high-level overview of UIMA concepts. Along the way, it pointed to other documents in the UIMA SDK documentation set where the reader can find details on how to apply the related concepts in building applications with the UIMA SDK.

At this point the reader may return to the documentation guide in Section 1.2, “How to use the Documentation” to learn how they might proceed in getting started using UIMA.

For a more detailed overview of the UIMA architecture, framework and development roles we refer the reader to the following paper:

D. Ferrucci and A. Lally, “Building an example application using the Unstructured Information Management Architecture,” IBM Systems Journal 43, No. 3, 455-475 (2004).

This paper can be found online at http://www.research.ibm.com/journal/sj43-3.html