|

||||||||||

| PREV CLASS NEXT CLASS | FRAMES NO FRAMES | |||||||||

| SUMMARY: NESTED | FIELD | CONSTR | METHOD | DETAIL: FIELD | CONSTR | METHOD | |||||||||

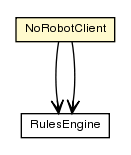

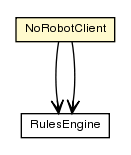

java.lang.Object
  org.apache.http.norobots.NoRobotClient

public class NoRobotClient

A client that decides which URLs on a website may be fetched, according to the norobots specification located at: http://www.robotstxt.org/wc/norobots-rfc.html

| Constructor Summary | |
|---|---|
| NoRobotClient(String userAgent) | Create a Client for a particular user-agent name. |

| Method Summary | |
|---|---|
| boolean | isUrlAllowed(URL url): Decide if the parsed website will allow this URL to be seen. |
| void | parse(URL baseUrl): Go to a website and read in its robots.txt file. |
| void | parseText(String txt) |

| Methods inherited from class java.lang.Object |
|---|
| clone, equals, finalize, getClass, hashCode, notify, notifyAll, toString, wait, wait, wait |

| Constructor Detail |
|---|

public NoRobotClient(String userAgent)

Parameters: userAgent - name for the robot

| Method Detail |
|---|

public void parse(URL baseUrl) throws NoRobotException

Parameters: baseUrl - of the site

Throws: NoRobotException

public void parseText(String txt) throws NoRobotException

Throws: NoRobotException

public boolean isUrlAllowed(URL url) throws IllegalStateException, IllegalArgumentException

Parameters: url - in question

Throws: IllegalStateException - when parse has not been called

Throws: IllegalArgumentException
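The parse-then-query contract documented above (rules must be loaded before isUrlAllowed is called, otherwise IllegalStateException) can be illustrated with a small self-contained sketch. The class below, RobotsSketch, is a hypothetical stand-in written for this example. It is not the library's NoRobotClient and says nothing about how that class is implemented. It assumes a simplified rule model: only User-agent and Disallow lines are read, agent names are matched by case-insensitive substring, and a record naming the agent takes priority over the `*` record. The helper isPathAllowed is also an assumption added for illustration.

```java
import java.net.URL;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical stand-in for illustration only; not the library's NoRobotClient. */
class RobotsSketch {
    private final String userAgent;
    private List<String> disallowed; // stays null until parseText has been called

    RobotsSketch(String userAgent) {
        this.userAgent = userAgent.toLowerCase(Locale.ROOT);
    }

    /** Reads robots.txt content and keeps the Disallow prefixes that apply to this agent. */
    void parseText(String txt) {
        List<String> specific = new ArrayList<>(); // rules from records naming this agent
        List<String> wildcard = new ArrayList<>(); // rules from "User-agent: *" records
        boolean sawSpecific = false;
        boolean appliesSpecific = false;
        boolean appliesWildcard = false;
        boolean lastWasAgent = false;
        for (String raw : txt.split("\\r?\\n")) {
            String line = raw;
            int hash = line.indexOf('#');
            if (hash >= 0) {
                line = line.substring(0, hash); // drop trailing comments
            }
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue; // blank or malformed line
            }
            String field = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim();
            if (field.equals("user-agent")) {
                if (!lastWasAgent) { // first agent line after rules starts a new record
                    appliesSpecific = false;
                    appliesWildcard = false;
                }
                String agent = value.toLowerCase(Locale.ROOT);
                if (agent.equals("*")) {
                    appliesWildcard = true;
                } else if (!agent.isEmpty() && userAgent.contains(agent)) {
                    appliesSpecific = true;
                    sawSpecific = true;
                }
                lastWasAgent = true;
            } else {
                lastWasAgent = false;
                if (field.equals("disallow") && !value.isEmpty()) {
                    if (appliesSpecific) specific.add(value);
                    if (appliesWildcard) wildcard.add(value);
                }
            }
        }
        disallowed = sawSpecific ? specific : wildcard;
    }

    /** Returns false when the path starts with any applicable Disallow prefix. */
    boolean isPathAllowed(String path) {
        if (disallowed == null) {
            throw new IllegalStateException("parseText has not been called");
        }
        for (String prefix : disallowed) {
            if (path.startsWith(prefix)) {
                return false;
            }
        }
        return true;
    }

    /** URL variant mirroring the documented method name; an empty path is treated as "/". */
    boolean isUrlAllowed(URL url) {
        String path = url.getPath();
        return isPathAllowed(path.isEmpty() ? "/" : path);
    }
}
```

With this sketch, a caller would construct the object with its robot name, call parseText once with the robots.txt content, and then call isUrlAllowed for each candidate URL. Calling the query method first fails fast, matching the IllegalStateException described for isUrlAllowed.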
