Apache Slider: Getting Started¶

This page is updated to reflect the latest code in "develop".

Introduction¶

The following provides the steps required for setting up a cluster and deploying a YARN hosted application using Slider.

System Requirements¶

The Slider deployment has the following minimum system requirements:

- Apache Hadoop 2.6+
- Required services: HDFS, YARN and ZooKeeper
- Oracle JDK 1.7 (64-bit)
- Python 2.6
- openssl

Setup the Cluster¶

Set up your Hadoop cluster with the services listed above.

Note: Ensure that the debug delay setting (yarn.nodemanager.delete.debug-delay-sec) is set to a non-zero value so that container files are retained long enough for debugging.

If you are using a single VM or a sandbox, you may need to modify your YARN configuration to allow for multiple containers on a single host.

In yarn-site.xml make the following modifications:

| Property | Value |
| --- | --- |
| yarn.scheduler.minimum-allocation-mb | >= 256 (ensure that YARN can allocate a sufficient number of containers) |
| yarn.nodemanager.delete.debug-delay-sec | >= 3600 (to retain for an hour) |

Example:

    <property>
      <name>yarn.scheduler.minimum-allocation-mb</name>
      <value>256</value>
    </property>
    <property>
      <name>yarn.nodemanager.delete.debug-delay-sec</name>
      <value>3600</value>
    </property>
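As a rough sanity check, the two settings above can be verified with a short script. This is only a sketch: the helper names `read_properties` and `find_problems` are made up for illustration, and the sample XML wraps the properties in a `<configuration>` root, as Hadoop configuration files do. In practice you would read the text of your own yarn-site.xml instead of `SAMPLE`.

```python
import xml.etree.ElementTree as ET

# Minimum values taken from the table above.
REQUIRED_MINIMUMS = {
    "yarn.scheduler.minimum-allocation-mb": 256,
    "yarn.nodemanager.delete.debug-delay-sec": 3600,
}


def read_properties(xml_text):
    """Return {name: value} for every <property> element in a Hadoop-style config."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def find_problems(props):
    """List the required properties that are missing, non-numeric, or too small."""
    problems = []
    for name, minimum in REQUIRED_MINIMUMS.items():
        raw = props.get(name)
        if raw is None:
            problems.append(f"{name} is not set")
        elif not raw.isdigit() or int(raw) < minimum:
            problems.append(f"{name} is {raw}, expected >= {minimum}")
    return problems


# Hypothetical sample input; replace with the contents of your yarn-site.xml.
SAMPLE = """<configuration>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>256</value>
  </property>
  <property>
    <name>yarn.nodemanager.delete.debug-delay-sec</name>
    <value>3600</value>
  </property>
</configuration>"""

print(find_problems(read_properties(SAMPLE)) or "settings look fine")
```

An empty problem list means both values meet the minimums from the table; it does not validate anything else in the file.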

Other options are described in the Slider Troubleshooting documentation.

Download Slider Packages¶

You can build Slider from source as described below.

Build Slider¶

- From the top-level directory, execute:

        mvn clean site:site site:stage package -DskipTests

- Use the generated compressed tar file in the slider-assembly/target directory (e.g. slider-0.80.0-incubating-all.tar.gz or slider-0.80.0-incubating-all.zip) for the subsequent steps.
- If you are cloning the Slider git repo, go to the releases/slider-0.80.0-incubating branch for the latest release, or to develop for the latest code under development.

Install Slider¶

Slider is installed on a client machine that can access the Hadoop cluster. Use the following steps to expand and install Slider:

    mkdir ${slider-install-dir}
    cd ${slider-install-dir}

You can run a Slider app as any user. The only requirement is that the user has a home directory on HDFS. For the remainder of this document, it is assumed that the "yarn" user is being used.

Log in as the "yarn" user (assuming this is a host associated with the installed cluster), e.g. su yarn. This assumes that all apps are run as the "yarn" user.

Expand the tar file:

    tar -xvf slider-0.80.0-incubating-all.tar.gz

or the zip file:

    unzip slider-0.80.0-incubating-all.zip

Configure Slider¶

Browse to the Slider directory: cd slider-0.80.0-incubating/conf

Edit slider-env.sh and specify correct values.

    export JAVA_HOME=/usr/jdk64/jdk1.7.0_67
    export HADOOP_CONF_DIR=/etc/hadoop/conf

(You only need to set JAVA_HOME if it is not already set)

If you are on a node that does not have the hadoop conf folder then you can add the relevant configurations into slider-client.xml.

You can also simply configure slider-client.xml with the path to the Hadoop configuration directory.

This can be absolute:

    <property>
      <name>HADOOP_CONF_DIR</name>
      <value>/etc/hadoop/conf</value>
    </property>

or it can be relative to the property SLIDER_CONF_DIR, which is set to the directory containing the slider-client.xml file:

    <property>
      <name>HADOOP_CONF_DIR</name>
      <value>${SLIDER_CONF_DIR}/../hadoop-conf</value>
    </property>

If the hadoop configuration dir is configured this way, the

For example, if you are targeting a non-secure cluster with no HA for NameNode or ResourceManager, modify Slider configuration file ${slider-install-dir}/slider-0.80.0-incubating/conf/slider-client.xml to add the following properties:

    <property>
      <name>hadoop.registry.zk.quorum</name>
      <value>yourZooKeeperHost:port</value>
    </property>
    <property>
      <name>yarn.resourcemanager.address</name>
      <value>yourResourceManagerHost:8050</value>
    </property>
    <property>
      <name>yarn.resourcemanager.scheduler.address</name>
      <value>yourResourceManagerHost:8030</value>
    </property>
    <property>
      <name>fs.defaultFS</name>
      <value>hdfs://yourNameNodeHost:8020</value>
    </property>
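If you maintain several client installs, the four properties above can be generated rather than typed by hand. The following is a minimal sketch, not part of Slider: `build_client_config` is a hypothetical helper, the ports 8050, 8030 and 8020 are simply the ones used in the example above, and the `<configuration>` root element follows the usual Hadoop configuration file layout.

```python
import xml.etree.ElementTree as ET


def build_client_config(zk_quorum, rm_host, namenode_host):
    """Return slider-client.xml text for a non-secure, non-HA cluster."""
    # Ports mirror the example above; adjust them to match your cluster.
    properties = {
        "hadoop.registry.zk.quorum": zk_quorum,
        "yarn.resourcemanager.address": f"{rm_host}:8050",
        "yarn.resourcemanager.scheduler.address": f"{rm_host}:8030",
        "fs.defaultFS": f"hdfs://{namenode_host}:8020",
    }
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


# Placeholder host names, as in the example above.
print(build_client_config("yourZooKeeperHost:port",
                          "yourResourceManagerHost",
                          "yourNameNodeHost"))
```

The printed text would need to be merged into any existing slider-client.xml rather than blindly overwriting it.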

Execute:

${slider-install-dir}/slider-0.80.0-incubating/bin/slider version

OR

python %slider-install-dir%/slider-0.80.0-incubating/bin/slider.py version

Ensure there are no errors and that the output includes "Compiled against Hadoop 2.6.0". This confirms that Slider is installed correctly.

Deploy Slider Resources¶

Ensure that all folders are accessible to the user creating the application instance. The example assumes "yarn" to be that user. The default YARN user may be different, e.g. hadoop.

Ensure HDFS home folder for the User¶

Perform the following steps to create the user home folder with the appropriate permissions:

    su hdfs
    hdfs dfs -mkdir /user/yarn
    hdfs dfs -chown yarn:hdfs /user/yarn

Create Application Packages¶

There are several sample application packages available for use with Slider:

- app-packages/memcached-win or memcached: The README.txt describes how to create the Slider Application Package for memcached for Linux or Windows. The memcached app packages are a good place to start.
- app-packages/hbase or hbase-win: The README.txt file describes how to create a Slider Application Package for HBase. By default it will create a package for HBase 0.98.3, but you can create the same for other versions.
- app-packages/accumulo: The README.txt file describes how to create a Slider Application Package for Accumulo.
- app-packages/storm or storm-win: The README.txt describes how to create the Slider Application Package for Storm.

Create one or more Slider application packages and follow the steps below to install them.

Install, Configure, Start and Verify Sample Application¶

Install Sample Application Package¶

slider install-package --name *package name* --package *sample-application-package*

The package is deployed on HDFS at <User home dir>/.slider/package/<name provided in the command>

This path is also reflected in appConfig.json through the property "application.def", so the path and the property value must be kept in sync.

For example, you can use the following command for the HBase application package:

slider install-package --name HBASE --package path-to-hbase-package.zip

Create Application Specifications¶

Configuring a Slider application consists of two parts: the Resource Specification and the Application Configuration.

Note: There are sample Resource Specifications (resources-default.json) and Application Configuration (appConfig-default.json) files in the application packages zip files.

Resource Specification¶

Slider needs to know what components (and how many components) to deploy.

As Slider creates each instance of a component in its own YARN container, it also needs to know what to ask YARN for in terms of memory and CPU for those containers.

All this information goes into the Resources Specification file ("Resource Spec") named resources.json. The Resource Spec tells Slider how many instances of each component in the application (such as an HBase RegionServer) to deploy and the parameters for YARN.

An application package should contain a default resources.json and you can start from there. Alternatively, you can create one based on the Resource Specification documentation.

Store the Resource Spec file on your local disk (e.g. /tmp/resources.json).
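To make the shape of a Resource Spec more concrete, here is a hedged sketch that generates one. The key names (`yarn.role.priority`, `yarn.component.instances`, `yarn.memory`), the `schema` value and the `slider-appmaster` entry are written from memory of the Slider Resource Specification and should be checked against the resources-default.json shipped in your application package. The component names `master` and `worker` and the helper `build_resource_spec` are purely illustrative.

```python
import json


def build_resource_spec(components):
    """Build a resources.json document.

    components maps a component name to (instances, memory_mb).
    """
    spec = {
        "schema": "http://example.org/specification/v2.0.0",
        "metadata": {},
        "global": {},
        "components": {"slider-appmaster": {}},
    }
    # Give every component its own priority, starting at 1, in name order.
    for priority, name in enumerate(sorted(components), start=1):
        instances, memory_mb = components[name]
        spec["components"][name] = {
            "yarn.role.priority": str(priority),
            "yarn.component.instances": str(instances),
            "yarn.memory": str(memory_mb),
        }
    return spec


# Hypothetical application with one master and two workers.
spec = build_resource_spec({"master": (1, 512), "worker": (2, 256)})
print(json.dumps(spec, indent=2))
```

The printed JSON could then be saved as /tmp/resources.json, with the component names replaced by those the application package actually defines.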

Application Configuration¶

Alongside the Resource Spec there is the Application Configuration file ("App Config"), which holds parameters that are specific to the application rather than to YARN, for example the heap sizes of the JVMs. This configuration is applied on top of the default configuration provided by the application definition and then handed off to the associated component agent.

The App Config defines the configuration details specific to the application and its component instances. An application package should contain a default appConfig.json and you can start from there.

Ensure the following variables are accurate:

- "application.def": "application_package.zip" (path to uploaded application package)
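Because "application.def" has to stay in sync with the name passed to install-package, a quick consistency check can catch mismatches before the application is created. This sketch assumes the property lives in the "global" section of appConfig.json (falling back to the top level) and that the package file sits under .slider/package/<name>/; both are assumptions to confirm against your own files. The function names and the sample file name hbase-package.zip are hypothetical.

```python
import json


def find_application_def(app_config):
    """Return the "application.def" value, or None if it is absent."""
    # Assumption: the property is normally in "global"; also try the top level.
    for section in (app_config.get("global", {}), app_config):
        if "application.def" in section:
            return section["application.def"]
    return None


def matches_installed_package(app_config, package_name):
    """True if application.def points below .slider/package/<package_name>/."""
    value = find_application_def(app_config)
    return value is not None and f".slider/package/{package_name}/" in value


# Hypothetical App Config fragment for a package installed with --name HBASE.
SAMPLE_APP_CONFIG = json.loads("""
{
  "global": {
    "application.def": ".slider/package/HBASE/hbase-package.zip"
  }
}
""")

print(matches_installed_package(SAMPLE_APP_CONFIG, "HBASE"))
```

The check is deliberately loose: it accepts both relative and absolute paths as long as the package directory appears in the value.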

Start the Application¶

Once the steps above are completed, the application can be started through the Slider Command Line Interface (CLI).

Change directory to the "bin" directory under the slider installation

cd ${slider-install-dir}/slider-0.80.0-incubating/bin

Execute the following command:

./slider create cl1 --template appConfig.json --resources resources.json

Verify the Application¶

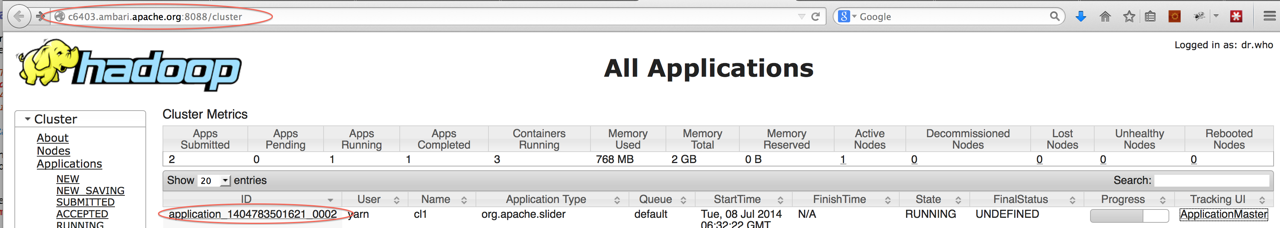

The successful launch of the application can be verified via the YARN Resource Manager Web UI. In most instances, this UI is accessible via a web browser at port 8088 of the Resource Manager Host:

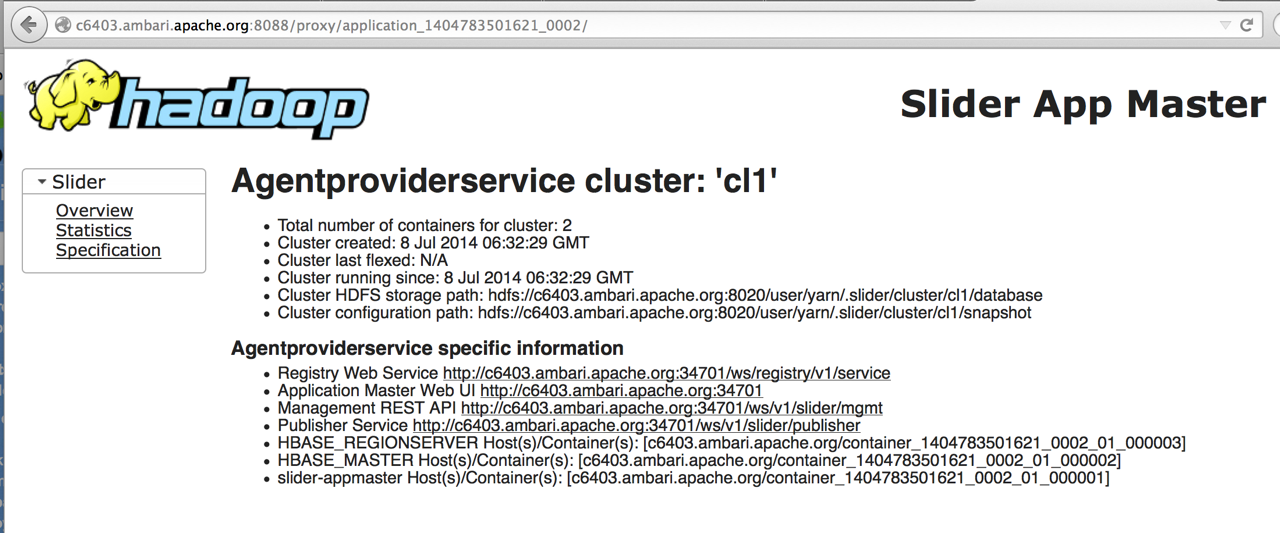

The specific information for the running application is accessible via the "ApplicationMaster" link that can be seen in the far right column of the row associated with the running application (probably the top row):

Obtain client config¶

An application publishes several useful details that can be used to manage and communicate with the application instance.

You can use registry commands to get all published data.

All published data

All published data are available at the /ws/v1/slider/publisher endpoint of the AppMaster (e.g. http://c6403.ambari.apache.org:34701/ws/v1/slider/publisher). The address is obtainable through slider-client status <app name>, where the field info.am.web.url specifies the base address. The URL is also advertised in the AppMaster tracking UI.

Client configuration

Client configurations are at /ws/v1/slider/publisher/slider/<config name> where config name can be

- a site config file name without extension, such as hbase-site (e.g. http://c6403.ambari.apache.org:34701/ws/v1/slider/publisher/slider/hbase-site), where the output is JSON-formatted name-value pairs
- or a name with extension, such as hbase-site.xml (http://c6403.ambari.apache.org:34701/ws/v1/slider/publisher/slider/hbase-site.xml), to get XML-formatted output that can be consumed by the clients
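The endpoint paths described above can be assembled from the AppMaster base address (the info.am.web.url value). The sketch below only builds URL strings and does not contact a cluster; the helper names are invented for illustration, and the host and port are the ones from the examples above.

```python
PUBLISHER_PATH = "/ws/v1/slider/publisher"


def publisher_url(am_base_url):
    """URL listing all published data for an application instance."""
    return am_base_url.rstrip("/") + PUBLISHER_PATH


def client_config_url(am_base_url, config_name, extension=None):
    """URL of one client configuration.

    Without an extension the endpoint returns JSON name-value pairs;
    with an extension such as "xml" it returns that format instead.
    """
    name = config_name if extension is None else f"{config_name}.{extension}"
    return f"{publisher_url(am_base_url)}/slider/{name}"


# Base address taken from the examples above.
base = "http://c6403.ambari.apache.org:34701"
print(publisher_url(base))
print(client_config_url(base, "hbase-site"))
print(client_config_url(base, "hbase-site", "xml"))
```

The resulting URLs can be fetched with any HTTP client once the application is running.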

Log locations

The log locations for various containers in the application instance are at /ws/v1/slider/publisher/slider/logfolders

    {
      "description": "LogFolders",
      "entries": {
        "c6403.ambari.apache.org-container_1404783501621_0002_01_000002-AGENT_LOG_ROOT": "/hadoop/yarn/log/application_1404783501621_0002/container_1404783501621_0002_01_000002",
        "c6403.ambari.apache.org-container_1404783501621_0002_01_000003-AGENT_LOG_ROOT": "/hadoop/yarn/log/application_1404783501621_0002/container_1404783501621_0002_01_000003",
        "c6403.ambari.apache.org-container_1404783501621_0002_01_000003-AGENT_WORK_ROOT": "/hadoop/yarn/local/usercache/yarn/appcache/application_1404783501621_0002/container_1404783501621_0002_01_000003",
        "c6403.ambari.apache.org-container_1404783501621_0002_01_000002-AGENT_WORK_ROOT": "/hadoop/yarn/local/usercache/yarn/appcache/application_1404783501621_0002/container_1404783501621_0002_01_000002"
      },
      "updated": 0,
      "empty": false
    }
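The entry keys in this output appear to follow the pattern <host>-<container id>-<folder kind>. Assuming that pattern holds, the following sketch groups the folders by container so that the log and work directories of one container can be looked up together. The function name `group_by_container` is hypothetical, and the sample document is a shortened copy of the output above.

```python
import json
from collections import defaultdict


def group_by_container(document):
    """Return {container_id: {folder_kind: path}} from a logfolders document."""
    grouped = defaultdict(dict)
    for key, path in document["entries"].items():
        # The folder kind (e.g. AGENT_LOG_ROOT) follows the last hyphen.
        prefix, kind = key.rsplit("-", 1)
        # Host names may contain hyphens, so split on the container marker.
        _host, marker, suffix = prefix.partition("-container_")
        if not marker:
            continue  # key does not match the assumed pattern
        grouped["container_" + suffix][kind] = path
    return dict(grouped)


# Shortened copy of the sample output above.
SAMPLE = json.loads("""
{
  "description": "LogFolders",
  "entries": {
    "c6403.ambari.apache.org-container_1404783501621_0002_01_000002-AGENT_LOG_ROOT": "/hadoop/yarn/log/application_1404783501621_0002/container_1404783501621_0002_01_000002",
    "c6403.ambari.apache.org-container_1404783501621_0002_01_000002-AGENT_WORK_ROOT": "/hadoop/yarn/local/usercache/yarn/appcache/application_1404783501621_0002/container_1404783501621_0002_01_000002"
  },
  "updated": 0,
  "empty": false
}
""")

for container, folders in group_by_container(SAMPLE).items():
    print(container, sorted(folders))
```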

Quick links

An application may publish quick links for the monitoring UI, JMX endpoint, etc., because the listening ports are dynamically allocated and thus need to be published. This information is available at /ws/v1/slider/publisher/slider/quicklinks.

Manage the Application Lifecycle¶

Once started, applications can be stopped, (re)started, and destroyed as follows:

Stopped:¶

./slider stop cl1

Started:¶

./slider start cl1

Destroyed:¶

./slider destroy cl1

Flexed:¶

./slider flex cl1 --component worker 5

Appendix A: Debugging Slider-Agent¶

Create and deploy Slider Agent configuration¶

Create an agent config file (agent.ini) based on the sample available at:

${slider-install-dir}/slider-0.80.0-incubating/agent/conf/agent.ini

The sample agent.ini file can be used as is (see below). Some of the parameters of interest are:

- log_level: INFO or DEBUG, to control the verbosity of the log
- app_log_dir: the relative location of the application log file
- log_dir: the relative location of the agent and command log files

Once created, deploy the agent.ini file to HDFS (it can be deployed in any location accessible to application instance):

    su yarn
    hdfs dfs -copyFromLocal agent.ini /user/yarn/agent/conf

Modify the --template json file (appConfig.json) and add the location of the agent.ini file.

"agent.conf": "/user/yarn/agent/conf/agent.ini"