Slider AM REST API: v2¶

This is a successor to the Slider v1 REST API.

This document represents the third iteration of designing a REST API to be implemented by the Slider Application Master.

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in RFC 2119.

History¶

- Created: January 2014

Introduction and Background¶

Slider 0.60 uses Apache Hadoop IPC for communications between the Slider client and the per-instance application master, with a read-only JSON view of the cluster, as documented in the Slider v1 REST API.

Were Slider to support a read/write REST API, it would be possible to:

- Communicate with a running AM from tools other than the Slider client, such as curl.
- Potentially communicate with a remote Hadoop cluster via Apache Knox.
- Offer alternative methods of constructing an application.

Slider Configuration Model and REST¶

Slider's declarative view of the application to deploy fits in well with a REST world view: one does not make calls to operations such as "increase region server count by two". Instead, the JSON specification of YARN resources is altered such that the region server count is incremented, then the new JSON document is submitted. Currently this is done via IPC.
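As a minimal sketch of this declarative style: the caller edits the desired count in the document rather than invoking an "add two" operation. The helper name `with_instance_delta` and the component name `regionserver` are hypothetical; the `components` / `yarn.component.instances` layout follows the `live/resources` example in this document, where counts are stored as strings.

```python
import copy
import json


def with_instance_delta(resources, component, delta):
    """Return a copy of `resources` with one component's desired count changed by `delta`."""
    updated = copy.deepcopy(resources)
    entry = updated["components"][component]
    # Counts are strings in the example documents, so convert in both directions.
    desired = int(entry["yarn.component.instances"]) + delta
    if desired < 0:
        raise ValueError("instance count cannot be negative")
    entry["yarn.component.instances"] = str(desired)
    return updated


# Hypothetical current specification with three region servers.
current = {"components": {"regionserver": {"yarn.component.instances": "3"}}}
proposed = with_instance_delta(current, "regionserver", 2)
print(json.dumps(proposed, indent=2))
```

The new document, not the delta, is what would be submitted; the original document is left untouched.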

Where Slider does not integrate well with REST is:

- The requirement for the initial application setup to be performed client-side.
- The need to interact with the YARN launcher services via RPC, building up the application to launch by uploading JAR files and building a Java command line from them.
- Having a split of three configuration documents, `internal.json`, `resources.json` and `app_conf.json`, to describe the application. A split view prevents an atomic operation of updating configuration and resources.
- No support for configuration updates of a running application. The content may be read, but a write is not supported (or, if it is, the changes are not applied until the application is next restarted).

Existing IPC API¶

The slider IPC layer uses protobuf-formatted payloads, with the Hadoop IPC layer handling security: authorization, authentication and encryption.

    service SliderClusterProtocolPB {
      rpc stopCluster(StopClusterRequestProto)
      rpc flexCluster(FlexClusterRequestProto)
      rpc killContainer(KillContainerRequestProto)
      rpc amSuicide(AMSuicideRequestProto)
      rpc getJSONClusterStatus(GetJSONClusterStatusRequestProto)
      rpc getInstanceDefinition(GetInstanceDefinitionRequestProto)
      rpc listNodeUUIDsByRole(ListNodeUUIDsByRoleRequestProto)
      rpc getNode(GetNodeRequestProto)
      rpc getClusterNodes(GetClusterNodesRequestProto)
    }

Only four operations are state transforming: stop, flex, kill-container and amSuicide; the latter two are implemented purely for testing. The flex operation is the sole state-changing operation with any payload other than a text message for logging/diagnostics:

message FlexClusterRequestProto { required string resources = 1; }

The remaining operations are to query the system, allowing the caller to query:

- The defined state of the cluster (the `InstanceDefinition` structure being the aggregate of internal, resources, and application JSON).
- IDs of containers in a specific role, and the details of one or more nodes, as listed by ID. The split of listing IDs and requesting details is to address scale.

Note that for a "version robust" marshalling format, Protobuf is (a) hard to work with in Java and (b) very hard to examine at the payload layer in Java (e.g. to enumerate elements which were not known at compile time) and (c) due to Google's lack of backwards compatibility in libraries and generated code, very brittle in the Java source.

Use Cases of an AM REST API¶

Here are the possible different cases of a Slider REST API.

Each one has different requirements, so the priority given to each use case will scope and direct the effort.

Command Line tooling¶

Direct communication with Slider via curl, wget, Python, and other lightweight tooling, rather than exclusively via the Java Slider JAR.

- Authentication must be in tools (`curl --negotiate`).
- Callers will still need the Java application installed to launch the Slider AM.

For this use case, we need to be very clear about what we are trying to do and why, rather than just "because". Being able to update the application state could be the most compelling example, a simple POST to flex the cluster size.

Another use here could be workflow operations, such as scripts to start and stop applications.

Requirements

- REST API usable from tooling supporting the desired set of operations.
- Scripts may need to have the ability to block on an operation until the application reaches a desired state (e.g. containers match requested count).

Web UI¶

The (currently minimal) Slider Web UI could forward operations to the REST API via HTML/HTML5 forms.

The state of the application could be presented better than it is today.

It would also be possible to build a more complex web application than that offered by Slider today.

Given that the server-side slider application has access to all the data already collected in the Slider AM and potentially offered by a REST API, providing a better view of this information does not require a REST API and sophisticated JS code in the browser: the application could be directly improved.

What would be novel is the ability of the client to change state.

Requirements

- Support for HTML form submission required.

Management tooling¶

The example of this is Apache Ambari, but it is not restricted to this program; Ambari is merely a representative example of "a web application launching and controlling an application via slider, on behalf of users".

We know today that such applications built in Java do not need a REST API; the slider client itself can be used for this.

What a REST API could do is:

- Decouple the app from versions of the slider client.
- Potentially retrieve information better.
- Provide a better conceptual model for operations.
- Allow access to metrics which are not exposed via the IPC API.

One caveat here is that, as the communications will be via the YARN RM proxy, operations which currently go directly from the management app to Slider will be proxied. This may have different latencies and failure modes.

Requirements

- Must be able to impersonate actual user of app.
- Can still use direct IPC to registry/ZK, YARN.
- May prefer subscription to events rather than polling.
- Detailed access to state of application and containers.
- May want more slider application metrics.

Long-haul Client¶

A long-haul client is probably the most complex client application. It can probably be done within the slider client codebase, so allowing remote application creation and manipulation.

Remote cloud deployments are a key target here, so we cannot expect the cluster's HDFS storage to be persistent over time. Instead we must keep persistent data (packages, JSON configurations) in the platform's persistent store (Amazon S3, Azure AVS, etc.). YARN node managers do already "localize" resources served up this way; persisting application state may be more complex if the consistency model of the object store does not match that of HDFS.

Requirements

- Full YARN REST API client to replace YARN's YarnClientImpl classes used in Slider today.
- REST API in slider to replace the existing IPC channel.
- Apache Knox routing of slider REST calls to YARN RM proxy.
- Remote read-only registry access via Apache Knox.
- Apache Knox publishing of slider Web UI.
- Apache Knox publishing of HTTP endpoints (REST, Web) exported via deployed applications.
- Slider to explicitly publish application endpoints in the YARN service registry.
- And/or applications to explicitly publish their endpoints in the YARN service registry.
- Compatible authentication.
- Package uploads and YARN resource submissions to be to the persistent data store rather than transient HDFS storage.
- Persistent application instance configuration to be in the persistent data store rather than transient HDFS storage.

Functional Testing¶

Slider uses the slider-client as an in-VM library during its minicluster unit tests.

For functional testing, it uses the slider CLI as an external application. This guarantees full testing of the CLI, including the shell/Python scripts themselves. It was this testing which picked up some problems with the Python script on Windows, and a later regression related to Accumulo. It also forces us to ensure that the return codes of operations differentiate between different failure causes, rather than providing a simple "-1" error indicating that an operation failed. Our exit codes are now something which may be used for support and debugging.

A REST API could also be used for testing, though not, for the reasons above, by slider itself, except in the special case of functional tests of the REST API itself.

Where it could be of use is functional testing of slider-deployed applications. These are less likely to use the Bigtop/slider test runner, and may be in different languages. A REST API would permit test runners in different languages to manipulate the application under test: trigger container failures, stop the application, etc.

Even here, having a per-platform/per-language test library will aid development. Alongside the Java client, libraries in Python, Go and C# are likely to cover a broad set of test runners.

In the Slider code, there is a lot of logic related to spinning while waiting for a cluster to change state, and reporting failures meaningfully if it does not. There is usually a sequence of:

1. A poll/wait loop awaiting the slider cluster operations to complete within a bounded time. As well as cluster expansion to the desired size, flex up/down and failure recovery are tested.
2. A poll/wait loop awaiting the deployed application itself to go live within a bounded time.

Once condition #2 is met, functional tests on the application can begin.

This sequence is recurrent enough that at least the slider startup phase should be automated in the client libraries, or possibly even a specific API call which allows an operation to block until a specific cluster state or a timeout.
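A bounded poll/wait loop of the kind described above might look like the following sketch. The function name `wait_until` and its parameters are assumptions made for illustration; `probe` stands in for whatever call fetches cluster or application state, and nothing here is taken from the Slider client code.

```python
import time


def wait_until(probe, predicate, timeout, interval=0.5):
    """Poll `probe()` until `predicate(value)` holds or `timeout` seconds elapse.

    Returns the value that satisfied the predicate; raises TimeoutError with
    the last observed value otherwise, so failures can be reported meaningfully.
    """
    deadline = time.monotonic() + timeout
    while True:
        value = probe()
        if predicate(value):
            return value
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"condition not met within {timeout}s; last value: {value!r}")
        # Never sleep past the deadline.
        time.sleep(min(interval, max(0.0, deadline - time.monotonic())))


# Stand-in probe: the container count grows over successive polls.
observed = iter([0, 1, 3])
final_count = wait_until(lambda: next(observed), lambda n: n >= 3,
                         timeout=5, interval=0.01)
```

The same helper can be used twice in a row: once for the cluster to reach its requested size, then once for the deployed application's own liveness check.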

What Slider cannot do is offer an operation to block until an application is live, at least not until/unless we add liveness checks.

Requirements

- Test-centric library for test platform.
- API calls to provide detailed diagnostics on problems.
- API calls to change application state, including triggering failures of containers and the application master.
- API calls to probe for state (ideally blocking).

Deployed Application¶

This is a use case which came from the Storm team: give the application the ability to talk to Slider and so query and manipulate its own deployment.

This allows the application to expand and contract itself based on perceived need, and to explicitly release specific components which are no longer required. It can also expose the YARN cluster details to the application, so allowing the deployed application to build a model of the YARN cluster without talking directly to it.

In this design, the Slider AM's REST API is no longer for clients of the application, or even management tools; it becomes the API by which deployed applications integrate with YARN. To use the currently fashionable terminology, it becomes a "microservice" rather than a library.

Requirements

- In-cluster API for talking to the AM.
- Detailed queries of state of running application (enumerating components & locations).
- Ability to query topology of YARN cluster/queue itself, e.g. labelled nodes and capacity, rack topology.
- Ability to request component instances on specific nodes, and with specific port bindings. Mandating the port bindings can ensure that client applications can retain existing bindings.
- Ability to blacklist specific nodes and have this forwarded to YARN. (+ query, reset blacklist if in YARN APIs)
- Ability to query/manipulate registry and quicklinks. (This can be done directly by the YARN registry anyway; it's not clear we need to add above and beyond a REST binding for the registry.)
- Ability to query status of outstanding requests, and to cancel them.
- Ability to query recent event history.

API Principles: High REST with Asynchronous state changes¶

URIs for overall and detailed access¶

Resources SHOULD use URIs over ? parameters or arguments within the body.

- DELETE operations MAY support optional ? parameters.
- GET operations MAY support optional parameters, when certain conditions are met:
    - The parameter does not fit logically into a resource URI. Example: "timeout".
    - There is no standard HTTP header which can be used.
    - Or: support for HTML forms is desired.

Use and generate standard HTTP Headers when possible¶

If there is a standard HTTP header for an option (such as a `Range` header), it MUST be used. This boosts compatibility with browsers and existing applications.

The services MUST return information that defines cache duration of retrieved data, possibly 0 seconds. Proxy caching MUST be disabled. (This comes for free with the NoCacheFilter; tests are needed to verify the filter is adding the values.)

GET for queries —and only queries¶

1. All side-effect free queries MUST be implemented via GET operations.
2. State changing operations MUST NOT be implemented in GET operations.

Rule #1 is for a coherent REST API. Rule #2 is mandated in the HTTP specification, and assumed to hold by those browsers which perform pre-emptive fetching.

PUT for overwrites to existing resources, or explicit creation of new ones.¶

If a URL references a valid resource, and an update to it makes sense (e.g. overwriting an existing resource topology with a new declaration), then the PUT verb SHOULD be preferred to POST.

It MAY also be used for resource creation operations —but only if the result of the PUT is a new resource at the final URL specified.

PUT operations MUST be idempotent¶

If a PUT operation is repeated, the final state of the model MUST be the same.

Processing of the initial PUT may result in external/visible actions. These actions SHOULD NOT be repeated when the second PUT is received. As an example, a PUT, twice, of a new resources.json model should eventually result in the final resource counts matching the desired state, without more container creation and deletion than if a single PUT had occurred.
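The idempotency requirement can be illustrated with a toy model. This is a sketch under assumptions, not the AM's implementation: the class `ResourceModel`, its fields and the component name `worker` are hypothetical. It tracks what has already been requested, so that a repeated PUT of the same document produces no further container requests.

```python
class ResourceModel:
    """Toy model: desired instance counts, plus the counts already acted upon."""

    def __init__(self):
        self.desired = {}
        self.requested = {}

    def put(self, desired):
        """Overwrite the desired state; return only the changes not yet actioned."""
        self.desired = dict(desired)
        actions = {}
        for component in set(self.desired) | set(self.requested):
            target = self.desired.get(component, 0)
            delta = target - self.requested.get(component, 0)
            if delta:
                # Positive delta: request containers; negative: release them.
                actions[component] = delta
                self.requested[component] = target
        return actions


model = ResourceModel()
first = model.put({"worker": 3})   # triggers three container requests
second = model.put({"worker": 3})  # same document again: nothing more to do
```

After both calls the model holds the same desired state, and only the first call produced any externally visible action.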

Operations which aren't idempotent MUST NOT be implemented as PUT; instead they should be implemented as POST operations, which are permitted to be non-idempotent.

DELETE for resource deletion operations¶

If resources are to be deleted, then DELETE is the operation of choice.

POST operations for system state changes that do not match a resource model.¶

Operations which do not map directly to the resource model SHOULD be implemented as POST operations.

POST operations MAY be non-idempotent¶

There is no requirement for POST operations to be idempotent.
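The verb rules in the preceding sections can be condensed into a small decision function. This is only a summary sketch; the function name and its boolean parameters are hypothetical and chosen for readability.

```python
def choose_verb(changes_state, deletes_resource=False,
                targets_resource=False, idempotent=False):
    """Pick an HTTP verb following the principles above."""
    if not changes_state:
        # Side-effect free queries are always GET, and GET never changes state.
        return "GET"
    if deletes_resource:
        return "DELETE"
    if targets_resource and idempotent:
        # Overwrite of, or explicit creation at, the final URL.
        return "PUT"
    # Anything that does not fit the resource model, or is not idempotent.
    return "POST"


print(choose_verb(changes_state=False))
print(choose_verb(changes_state=True, targets_resource=True, idempotent=True))
print(choose_verb(changes_state=True, targets_resource=True, idempotent=False))
```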

Payloads SHOULD be JSON payloads¶

The bodies of operations SHOULD be JSON.

POST operations MAY also support `application/x-www-form-urlencoded`, so as to handle data directly from an HTML form.
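A handler that accepts both body formats could dispatch on the media type, roughly as sketched below. The function `decode_body` and the field names in the usage lines are hypothetical; the choice to keep only the last value of a repeated form field is an assumption of this sketch.

```python
import json
from urllib.parse import parse_qs


def decode_body(content_type, body):
    """Decode a request body (bytes) into a dict, based on its Content-Type."""
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";", 1)[0].strip().lower()
    text = body.decode("utf-8")
    if media_type == "application/json":
        return json.loads(text)
    if media_type == "application/x-www-form-urlencoded":
        # parse_qs returns a list per field; keep the last value of each.
        return {key: values[-1] for key, values in parse_qs(text).items()}
    raise ValueError(f"unsupported media type: {media_type}")


from_json = decode_body("application/json; charset=utf-8", b'{"message": "done"}')
from_form = decode_body("application/x-www-form-urlencoded", b"message=done")
```

Both calls yield the same dictionary, so the rest of the handler does not need to know which format the client used.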

Errors MUST be meaningful¶

If an operation fails, enough information should be provided to aid diagnosis of the problem.

- The error code MUST match the conventional value (i.e. not a generic SOAP-style 500 error).
- The body of the response MUST be meaningful, possibly including stack traces, host information, connection information, etc.
- We have to make sure that the length of the response is > 512 bytes to stop Chrome adding its own "helpful" error text.

Jersey is going to interfere here with its own exception logic; methods must catch all exceptions and convert them to WebAppException instances to avoid them being mishandled.
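One way to meet the 512-byte point in the list above is to pad short error bodies. The sketch below is an assumption about how that could be done, not a description of Slider's code; the field names in the payload are hypothetical. It relies on the fact that whitespace after a JSON value is valid JSON.

```python
import json

MIN_ERROR_BYTES = 513  # strictly more than 512 bytes


def error_body(status, message, details=""):
    """Build a JSON error body that is always longer than 512 bytes."""
    body = json.dumps({"status": status, "message": message, "details": details})
    shortfall = MIN_ERROR_BYTES - len(body.encode("utf-8"))
    if shortfall > 0:
        # Trailing spaces keep the document parseable while adding length.
        body += " " * shortfall
    return body


short_error = error_body(404, "no such container")
print(len(short_error.encode("utf-8")))
```

Bodies that already carry a stack trace or other diagnostics will normally exceed the threshold and are returned unpadded.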

The API MUST be Secure¶

The REST API must be secure. In the context of a YARN application, this means all communications in a secure cluster must be via the Kerberos/SPNEGO-authenticated ResourceManager proxy.

Development-time exception: disable the proxy on the /ws/ path of the web application, so that the full set of HTTP verbs can be used, without depending on Hadoop-2.7 proxy improvements.

Minimal¶

Features implemented via HDFS and YARN SHOULD NOT be re-implemented in the Slider AM REST API.

Asynchronous Actions and state-changes¶

All state changes are asynchronous, serialized and queued within the AM.

This is what happens today; there is some optimisation for handling multiple cluster-size changing events in the queue such that a "review and request containers" operation is postponed until all pending size-changing events (flex, container-loss, ...) have been processed.

This means that while REST operations (and YARN events) are queued in the order of receipt, some operations —such as a flex operation— may not have any work performed while later events arrive in the queue.
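The queueing behaviour described above can be sketched as follows. This is a simplified, single-threaded illustration, not the AM's queue: the class `ActionQueue`, the event names and the `reviews` counter are hypothetical. Events are processed in order of receipt, but the "review and request containers" step only runs once no further size-changing events are pending.

```python
from collections import deque

# Event kinds that change the target cluster size (names are illustrative).
SIZE_CHANGING = {"flex", "container-loss"}


class ActionQueue:
    def __init__(self):
        self.pending = deque()
        self.processed = []
        self.reviews = 0  # how many times the container review ran

    def submit(self, kind):
        self.pending.append(kind)

    def run(self):
        """Drain the queue in order, postponing the review while size changes remain."""
        review_needed = False
        while self.pending:
            kind = self.pending.popleft()
            self.processed.append(kind)
            if kind in SIZE_CHANGING:
                review_needed = True
            more_size_changes = any(k in SIZE_CHANGING for k in self.pending)
            if review_needed and not more_size_changes:
                self.reviews += 1
                review_needed = False


queue = ActionQueue()
for event in ("flex", "container-loss", "flex"):
    queue.submit(event)
queue.run()
print(queue.processed, queue.reviews)
```

Three size-changing events are handled in order, yet the review runs once, which is why an early flex may appear to do no work while later events are still queued.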

The response code to an asynchronous operation SHOULD be 202, ACCEPTED.

YARN dependencies¶

What do we need from YARN?

- Redirect of HTTP verbs from AM to RM proxy via a 307 "retry same operation" response.
- Passthrough of all HTTP verbs in RM Proxy.
- RM HA proxy to redirect from standby to primary RM with 307.
- Ideally: OPTIONS verbs to list available operations (somewhat superfluous).
- No interference with the output of errors if the content is not in text/html format.
- For a pure-REST client, a RESTy registry API in both YARN and Knox.

Resource Structure¶

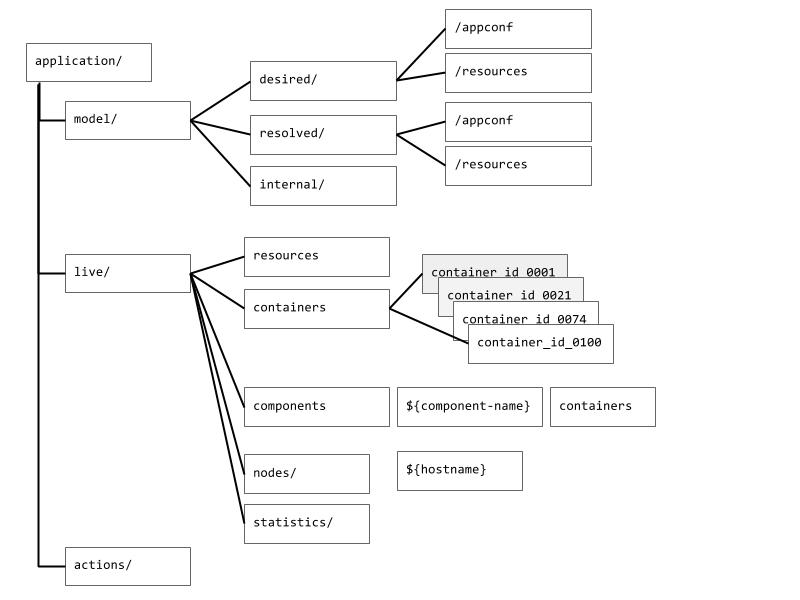

Core concepts:

- The model of what is desired under `/application/model`. This will present a hierarchical view of the desired state and the "resolved" view: the one in which the inheritance process has been applied.
- The live view of what is going on in the application under `/application/live`.

/application¶

All Application resources¶

All entries will be under the service path /application, which itself is under the /ws/v1/ path of the Slider web interface.

/application/model/ :¶

GET and, for some URLs, PUT view of the specification¶

/application/model/desired/¶

This is where the specification of the application (resources and configuration) can be read and written.

- Write accesses to `resources/` trigger a flex operation.
- Write accesses to `configuration` will only take effect on a cluster upgrade or restart.
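A flex via this resource could be expressed as a PUT of the new resources document. The sketch below only builds the request object and sends nothing. The base URL, the helper name, the component name and the exact path `model/desired/resources` (derived from the paths named in this section) are assumptions; authentication via the RM proxy is out of scope here.

```python
import json
from urllib.request import Request

# Service path as described in this document: /application under /ws/v1/.
APPLICATION_PATH = "/ws/v1/application"


def build_flex_request(base_url, resources):
    """Build (but do not send) a PUT of the desired resources document."""
    url = base_url.rstrip("/") + APPLICATION_PATH + "/model/desired/resources"
    body = json.dumps(resources).encode("utf-8")
    return Request(url, data=body, method="PUT",
                   headers={"Content-Type": "application/json"})


# Hypothetical AM address and component name.
desired = {"components": {"worker": {"yarn.component.instances": "4"}}}
request = build_flex_request("http://am.example.org:8080/", desired)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the call; because the operation is a PUT, repeating it with the same document should leave the model unchanged.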

/application/model/resolved/¶

The resolved specification, the one where we implement the inheritance, and, when we eventually do x-refs, all non-LAZY references. This lets the caller see the final configuration model.

/application/model/internal/¶

Read-only view of internal.json. Exported for diagnostics and completeness.

/application/live/ :¶

GET and DELETE view of the live application¶

This provides different views of the system, something which we can delve into:

- total list of all containers by ID: `/application/live/containers`
- retrieval of a container's specifics: `/application/live/containers/{container_id}`
- DELETE will support decommission of a container and recommission
- listing of component state: desired, actual, outstanding requests, YARN attributes
- listing of containers by component type
- listing of nodes known about and containers in each. DELETE node_id will decommission all containers on a node
- history: placement history
- "system" state: AM state, outstanding requests, upgrade in progress

/application/actions¶

POST state changing operations¶

These are for operations which are hard to represent in a simple REST view within the AM itself.

Proposed State Query Operations¶

All of these are GET operations on data that is not directly mutable

| Path | Data |
| --- | --- |
| live/ | list of child paths |
| live/resources | desired/resources.json extended with statistics of the actual, pending, and failed resource allocations. |
| live/containers | sorted list of container IDs |
| live/containers/{container_id} | details on a specific container: ContainerInfo |
| live/containers/{container_id}/logs | maybe: 302 to YARN log dir |
| live/components/ | list of components and summary data |
| live/components/{component} | Info on a specific component, including list of containers |
| live/components/{component}/instances/{index} | Index of containers; map to contents of container ID. |
| live/components/{component}/containers/ | List of container IDs for a named component type |
| live/components/{component}/containers/{container_id} | ContainerInfo |
| live/nodes/ | List of known nodes in cluster |
| live/nodes/{nodeid} | Node info on a node (e.g. history containers) |
| live/liveness | aggregate liveness information |
| live/liveness/{component} | Liveness information for a named component type |
| live/statistics | General statistics |

All live values will be cached and refreshed regularly; the caching ensures that a heavy load of GET operations does not overload the application master. It does mean that there may be a delay of under a second before an updated value is visible.
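A time-bounded cache of this kind can be sketched in a few lines. The class `CachedValue` is hypothetical, and it refreshes lazily on read once the entry has expired; the text above does not say whether the AM refreshes on read or from a background thread, so that choice is an assumption of the sketch.

```python
import time


class CachedValue:
    """Cache the result of `loader()` for `ttl_seconds`."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._value = None
        self._expires = None  # None until the first load

    def get(self):
        now = self._clock()
        if self._expires is None or now >= self._expires:
            # Expired or never loaded: recompute and restart the timer.
            self._value = self._loader()
            self._expires = now + self._ttl
        return self._value


# Demonstration with a controllable clock and a loader that counts its calls.
fake_now = [0.0]
load_count = [0]


def load_container_ids():
    load_count[0] += 1
    return ["container_%d" % load_count[0]]


cache = CachedValue(load_container_ids, ttl_seconds=0.5, clock=lambda: fake_now[0])
first_read = cache.get()
second_read = cache.get()   # served from the cache, loader not called again
fake_now[0] = 1.0           # advance past the expiry time
third_read = cache.get()    # triggers a refresh
```

However many GET requests arrive within the time-to-live, the expensive state snapshot is computed once.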

Actions¶

Actions are POST operations.

| Path | Operation |
| --- | --- |
| action/stop | Stop the application |
| action/upgrade | Rolling upgrade of the application |
| action/ping | Simple ping operation (which also takes PUT & DELETE and any other verb). It can be used to verify passthrough of HTTP POST/PUT/DELETE operations |

We could model this differently, with an "/operation" URL to which you PUT/GET an operation, DELETE to cancel (if permitted), but it would get contrived unless an actual queue of actions was presented.

Different operations would simply be a different operation payload.

This is different from a POST in that a GET of the URL would return details on its ongoing status, and so be important for the upgrade. In this model:

- In normal operation a GET would return a normal status "operation":"".
- When a stop is PUT, the operation is "stop" until the AM is stopped.
- When an upgrade is PUT, the GET returns the upgrade operation, submitted parameters and progress.
- It MAY be possible to overwrite an existing operation with a new one, though that will depend on the active operation. Specifically, "upgrade" would only support "stop"; "stop" would only support "stop". The empty operation, "", will support anything.
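The overwrite rules for this alternative "/operation" model amount to a small transition table. The sketch below encodes exactly the three cases stated above; the table and function names are hypothetical.

```python
# Which new operations may replace the currently active one.
# None means "anything is accepted".
ALLOWED_OVERWRITES = {
    "": None,             # no active operation
    "upgrade": {"stop"},  # an upgrade may only be replaced by a stop
    "stop": {"stop"},     # once stopping, only a repeated stop is accepted
}


def can_overwrite(active, new):
    """Return True if operation `new` may be PUT while `active` is in progress."""
    if active not in ALLOWED_OVERWRITES:
        raise ValueError(f"unknown active operation: {active!r}")
    allowed = ALLOWED_OVERWRITES[active]
    return allowed is None or new in allowed


print(can_overwrite("", "upgrade"))
print(can_overwrite("upgrade", "stop"))
print(can_overwrite("stop", "upgrade"))
```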

Non-normative Example Data structures¶

application/live/resources¶

The contents of application/live/resources on an application which only has an application master deployed. The `yarn.component.instances.*` entries that follow `yarn.component.instances` are the statistics related to the live state; the remainder are the original values.

```
{
  "schema" : "http://example.org/specification/v2.0.0",
  "metadata" : { },
  "global" : { },
  "credentials" : { },
  "components" : {
    "slider-appmaster" : {
      "yarn.memory" : "1024",
      "yarn.vcores" : "1",
      "yarn.component.instances" : "1",
      "yarn.component.instances.requesting" : "0",
      "yarn.component.instances.actual" : "1",
      "yarn.component.instances.releasing" : "0",
      "yarn.component.instances.failed" : "0",
      "yarn.component.instances.completed" : "0",
      "yarn.component.instances.started" : "1"
    }
  }
}
```
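A client could use such a document to compare desired and actual instance counts per component. The helper below is a sketch; the function name is hypothetical, and treating a missing `actual` entry as zero is an assumption. The sample data is a trimmed copy of the example document above.

```python
import json


def instance_gaps(live_resources):
    """Map each component to (desired - actual) instance count."""
    gaps = {}
    for name, entry in live_resources["components"].items():
        # All values in the document are strings, so convert before arithmetic.
        desired = int(entry["yarn.component.instances"])
        actual = int(entry.get("yarn.component.instances.actual", "0"))
        gaps[name] = desired - actual
    return gaps


sample = json.loads("""
{
  "components" : {
    "slider-appmaster" : {
      "yarn.component.instances" : "1",
      "yarn.component.instances.requesting" : "0",
      "yarn.component.instances.actual" : "1"
    }
  }
}
""")
gaps = instance_gaps(sample)
print(gaps)
```

A gap of zero for every component means the live state matches the desired state.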

live/liveness¶

The liveness URL returns a JSON structure on the liveness of the application as perceived by Slider itself.

See org.apache.slider.api.types.ApplicationLivenessInformation

    {
      "allRequestsSatisfied": true,
      "requestsOutstanding": 0
    }

Its initial/basic form counts the number of outstanding container requests.

This could be extended in future with more criteria, such as whether the minimum number/percentage of desired containers of each component type has been allocated, or even how many were actually running.

Any new liveness probes will supplement rather than replace the current values.
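Because new probes will only add fields, a client can parse the two current fields and ignore anything it does not recognise. The dataclass below is a client-side sketch with hypothetical names; it is not the `ApplicationLivenessInformation` class itself, and the extra field in the usage example is invented purely to show that unknown fields are tolerated.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Liveness:
    all_requests_satisfied: bool
    requests_outstanding: int

    @classmethod
    def from_json(cls, text):
        doc = json.loads(text)
        # Only the two documented fields are read; extra fields are ignored
        # so that later additions do not break this parser.
        return cls(bool(doc["allRequestsSatisfied"]),
                   int(doc["requestsOutstanding"]))


liveness = Liveness.from_json(
    '{"allRequestsSatisfied": true, "requestsOutstanding": 0, "futureProbe": 1}')
print(liveness)
```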

To be defined: live/statistics¶

The statistics will cover the collected statistics on a component type, as well as aggregate statistics on an application instance.

When an Application Master is restarted, all statistics will be lost.